
Selling like hotcakes: The extraordinary demand for Blackwell GPUs illustrates the need for robust, energy-efficient processors as companies race to implement more sophisticated AI models and applications. The coming months will be critical for Nvidia as the company works to ramp up production and meet the overwhelming demand for its latest product.

Nvidia's latest Blackwell GPUs are experiencing unprecedented demand, with the company reporting that it has sold out of these next-gen processors. Nvidia CEO Jensen Huang revealed the news during an investor meeting hosted by Morgan Stanley. Morgan Stanley analyst Joe Moore notes that Nvidia executives disclosed that their Blackwell GPU products have a 12-month backlog, echoing a similar situation with Hopper GPUs several quarters ago.

The overwhelming demand for Blackwell GPUs comes from Nvidia's traditional customers, including major tech giants like AWS, CoreWeave, Google, Meta, Microsoft, and Oracle. These companies have purchased every Blackwell GPU that Nvidia and its manufacturing partner TSMC can produce for the next four quarters. The extreme demand indicates that Nvidia's already considerable footprint in the AI processor market should continue to grow next year, even as rivals such as AMD, Intel, and various cloud service providers compete for their share.

"Our view continues to be that Nvidia is likely to gain share of AI processors in 2025, as the biggest users of custom silicon are seeing very steep ramps with Nvidia solutions next year," Moore said in a client note.

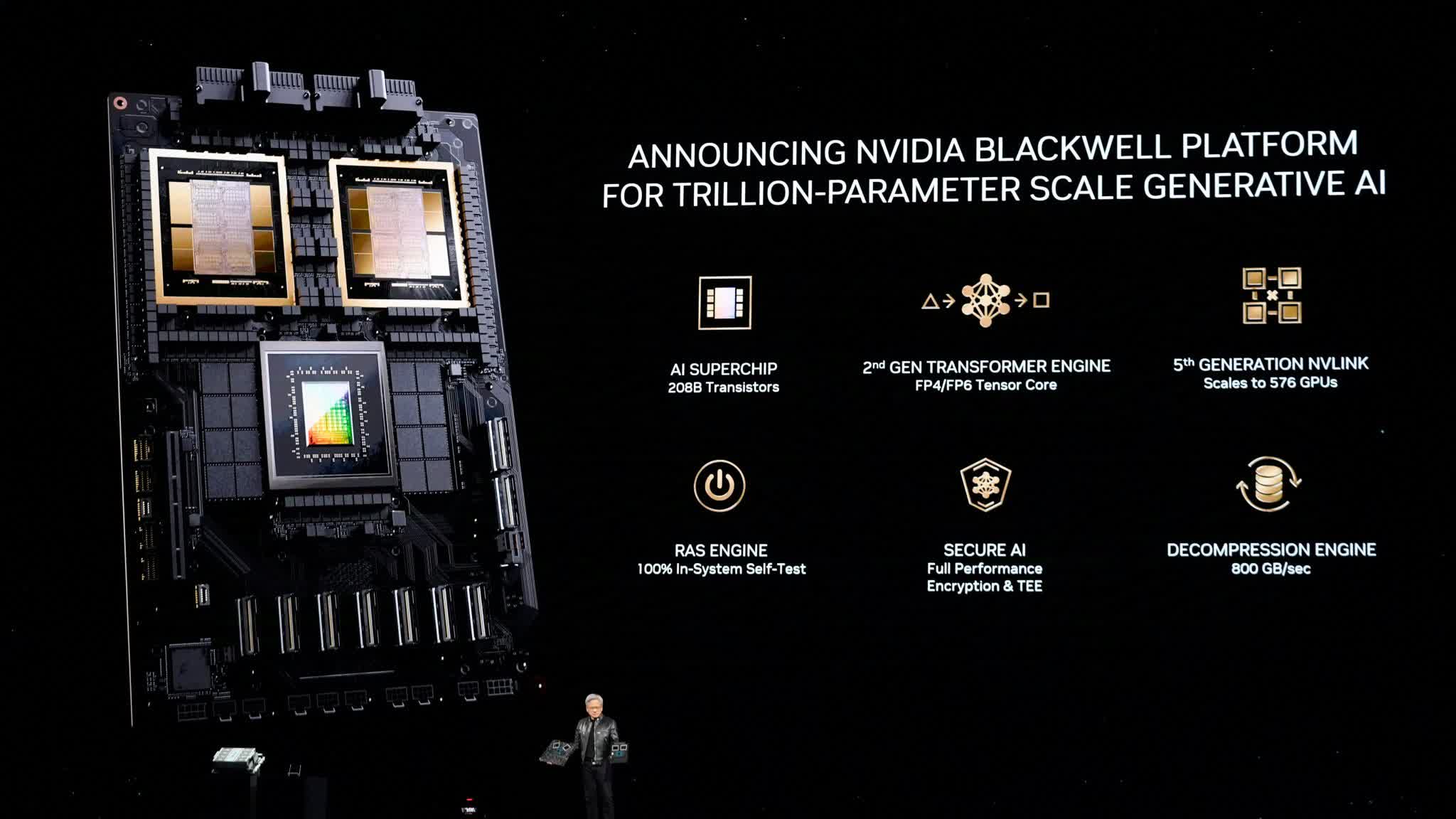

Nvidia unveiled the Blackwell GPU platform in March. It includes the B200 GPU and GB200 Grace "super chip." These processors can handle the demanding workloads of large language model (LLM) inference while significantly reducing energy consumption, a growing concern in the industry.

Nvidia has overcome initial packaging issues with its B100 and B200 GPUs, allowing the company to ramp up production. Both GPUs utilize TSMC's advanced CoWoS-L packaging technology. However, questions remain about whether TSMC has sufficient CoWoS-L capacity to meet the skyrocketing demand.

Another potential bottleneck in the production process is the supply of HBM3E memory, which is crucial for high-performance GPUs like Blackwell. Tom's Hardware pointed out that Nvidia has yet to qualify Samsung's HBM3E memory for its Blackwell GPUs, adding another layer of complexity to the supply chain.

In August, Nvidia acknowledged that its Blackwell-based products were experiencing low yields, necessitating a re-spin of some layers of the B200 processor to improve production efficiency. Despite these challenges, Nvidia remains confident in its ability to ramp up Blackwell production in Q4 2024. It expects to ship several billion dollars' worth of Blackwell GPUs in the last quarter of this year.

Nvidia Blackwell GPUs sold out for the next 12 months as AI market boom continues