Rumor mill: The rumored specifications of Nvidia's next-generation consumer graphics cards have fluctuated for months but could be starting to solidify as their unveiling and release draw closer. The latest reports introduce slight changes to the expected memory configuration and physical size. The high-end and flagship products are still expected to launch before the end of 2024.

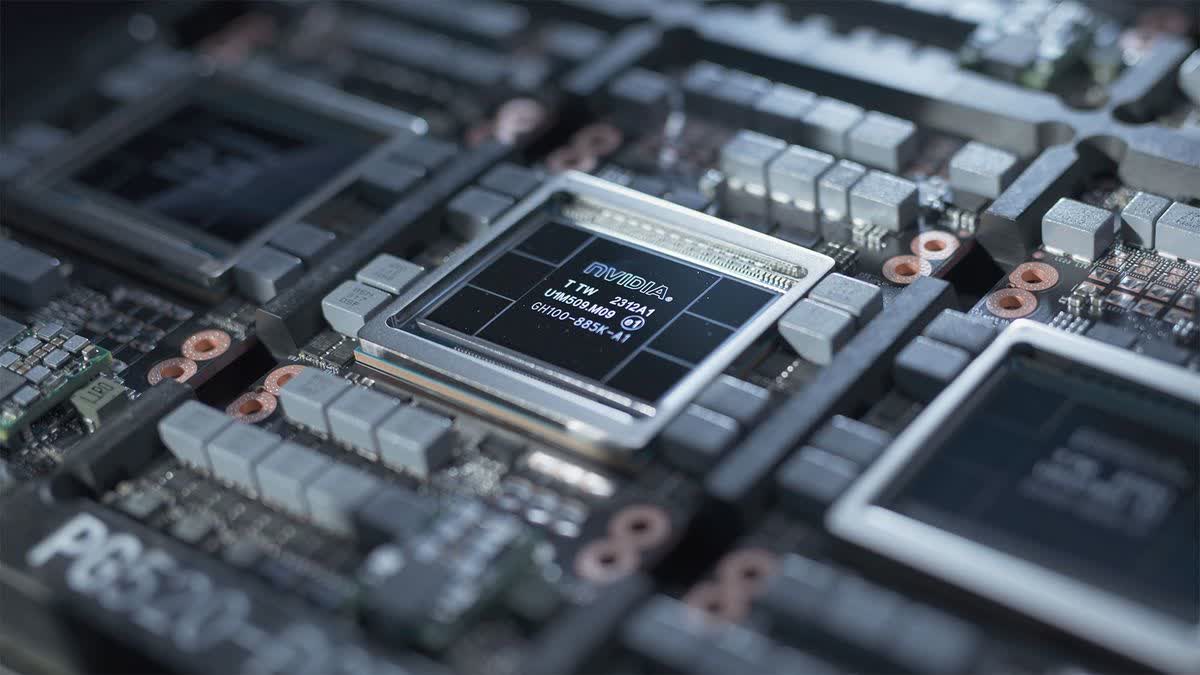

Nvidia tipster @kopite7kimi reports that Nvidia has taped out the GB202 and GB203 GPUs, expected to power the upcoming GeForce RTX 5090 and 5080 graphics cards. The flagship 5090 might not be as large as previously expected and could use a somewhat unusual VRAM configuration.

The RTX 4090 isn't just known for being the fastest consumer GPU – it's also ridiculously huge owing to the three-slot cooler used by Nvidia's Founders Edition. According to Kopite, its successor will return to a more normal-looking two-slot design with two fans.

(Embedded post: kopite7kimi (@kopite7kimi), May 29, 2024)

Team Green's reasoning remains unclear, but the decision could indicate a significant change in the 5090's power draw or shader count. The 4090 is a 450W GPU, and earlier leaks suggested that Nvidia had tested a successor that could have been a 600W monster. Even if the company goes in the opposite direction, board partners could still introduce larger designs.

Meanwhile, Panzerlied – a known leaker on the Chiphell forums – claims that the GB202 incorporates a dense memory pattern with 16 modules and a 448-bit interface. This information counters prior reports indicating a 512-bit bus.

If the 5090 features 28GB of GDDR7 VRAM at 28Gbps, as rumors have long suggested, then it might use only 14 of the GB202's 16 memory modules. Each module connects over a 32-bit channel, so 14 modules add up to a 448-bit bus. That would theoretically give the flagship GPU about 1.5TB/s of memory bandwidth, roughly 55 percent more than the 4090's 1TB/s. Although the 5080 and 5070 are also expected to use GDDR7, they will likely feature narrower 256-bit and 192-bit interfaces, respectively.
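The bandwidth figure follows from simple arithmetic: peak bandwidth equals bus width in bits times the per-pin data rate, divided by eight bits per byte. The sketch below works through the rumored numbers. It assumes 32-bit, 2GB GDDR7 modules (implied by the rumored 28GB across 14 modules) and uses the RTX 4090's known 384-bit, 21Gbps GDDR6X setup for comparison. The helper names are illustrative only.

```python
# Assumption: each GDDR7 module has a 32-bit interface and 2GB capacity,
# consistent with the rumored 28GB spread across 14 modules.
BITS_PER_MODULE = 32
GB_PER_MODULE = 2


def bus_width_bits(modules: int) -> int:
    """Total memory bus width for a given number of 32-bit modules."""
    return modules * BITS_PER_MODULE


def capacity_gb(modules: int) -> int:
    """Total VRAM for a given number of 2GB modules."""
    return modules * GB_PER_MODULE


def bandwidth_gb_s(bus_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: pin count times per-pin rate, over 8 bits per byte."""
    return bus_bits * data_rate_gbps / 8


# Rumored RTX 5090: 14 of GB202's 16 modules at 28Gbps.
rtx5090_bus = bus_width_bits(14)                  # 448 bits
rtx5090_vram = capacity_gb(14)                    # 28 GB
rtx5090_bw = bandwidth_gb_s(rtx5090_bus, 28.0)    # 1568.0 GB/s

# RTX 4090: 384-bit bus with 21Gbps GDDR6X.
rtx4090_bw = bandwidth_gb_s(384, 21.0)            # 1008.0 GB/s

uplift = rtx5090_bw / rtx4090_bw - 1

print(f"RTX 5090 (rumored): {rtx5090_bus}-bit, {rtx5090_vram}GB, {rtx5090_bw:.0f} GB/s")
print(f"RTX 4090: 384-bit, {rtx4090_bw:.0f} GB/s")
print(f"Uplift: {uplift:.1%}")
```

Populating all 16 modules would instead yield the 512-bit bus that earlier reports described.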

Amid the recent reports, Kopite also reiterated earlier claims that the RTX 50-series cards, codenamed "Blackwell," will keep monolithic designs. Rival AMD has been gradually moving toward chiplets with its CPUs and GPUs, but Nvidia has only used the format for its enterprise AI graphics processors.

Nvidia is rumored to be planning a late 2024 launch for the RTX 5090 and 5080, with the 5080 possibly shipping sooner. They might face new competition from AMD's RDNA 4 and Intel's Arc Battlemage.

Nvidia GeForce RTX 5090 tape-out rumors: smaller than the 4090, 448-bit bus, monolithic die