In context: Now that the crypto mining boom is over, Nvidia has yet to return to its previous gaming-centric focus. Instead, it has jumped into the AI boom, providing GPUs to power chatbots and AI services. It currently has a corner on the market, but a consortium of companies is looking to change that by designing an open communication standard for AI processors.

Some of the largest technology companies in the hardware and AI sectors have formed a consortium to create a new industry standard for GPU connectivity. The Ultra Accelerator Link (UALink) group aims to develop open technology that benefits the entire AI ecosystem, rather than leaving it reliant on a single company like Nvidia and its proprietary NVLink technology.

The UALink group includes AMD, Broadcom, Cisco, Google, Hewlett Packard Enterprise (HPE), Intel, Meta, and Microsoft. According to its press release, the open industry standard developed by UALink will enable better performance and efficiency for AI servers, helping GPUs and specialized AI accelerators communicate "more effectively."

Companies such as HPE, Intel, and Cisco will bring their "extensive" experience in creating large-scale AI solutions and high-performance computing systems to the group. As demand for AI computing continues to grow rapidly, a robust, low-latency, scalable network that can efficiently share computing resources is crucial for future AI infrastructure.

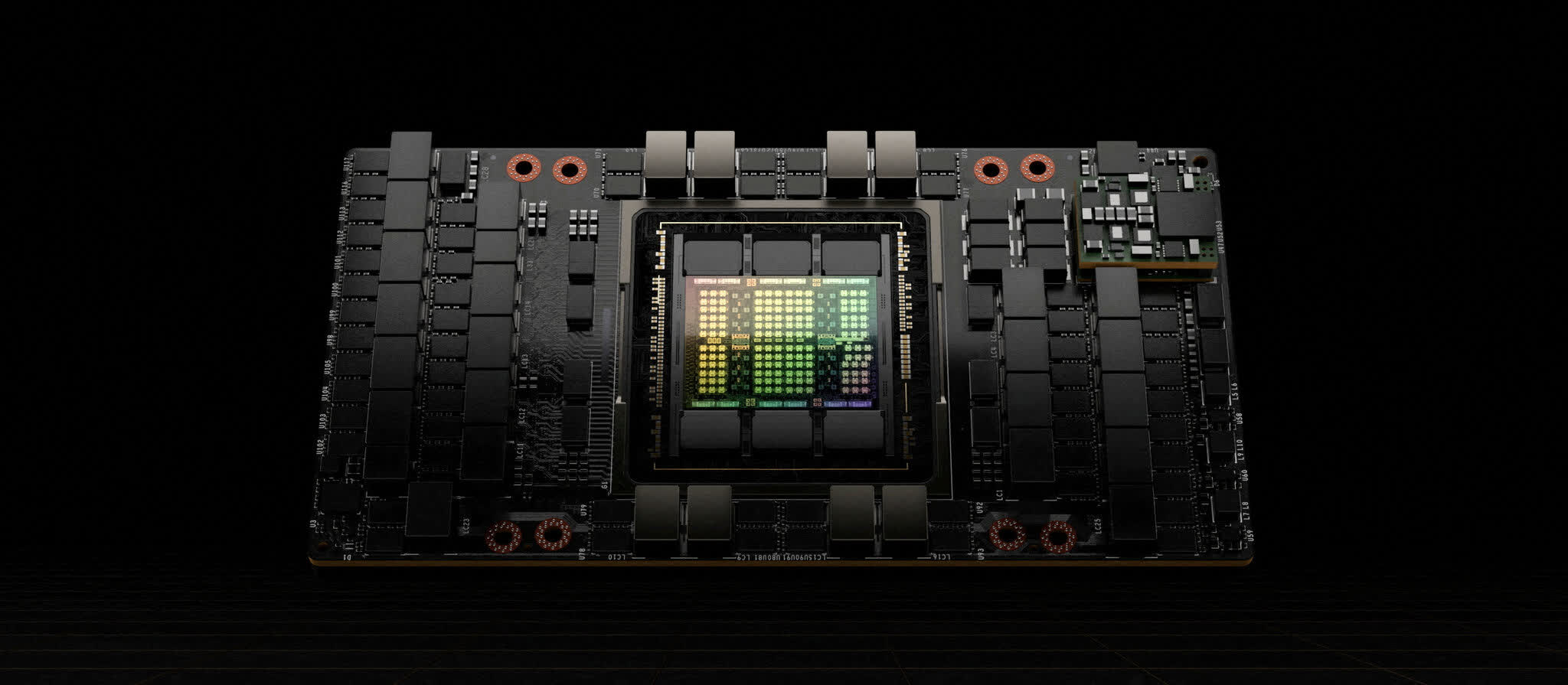

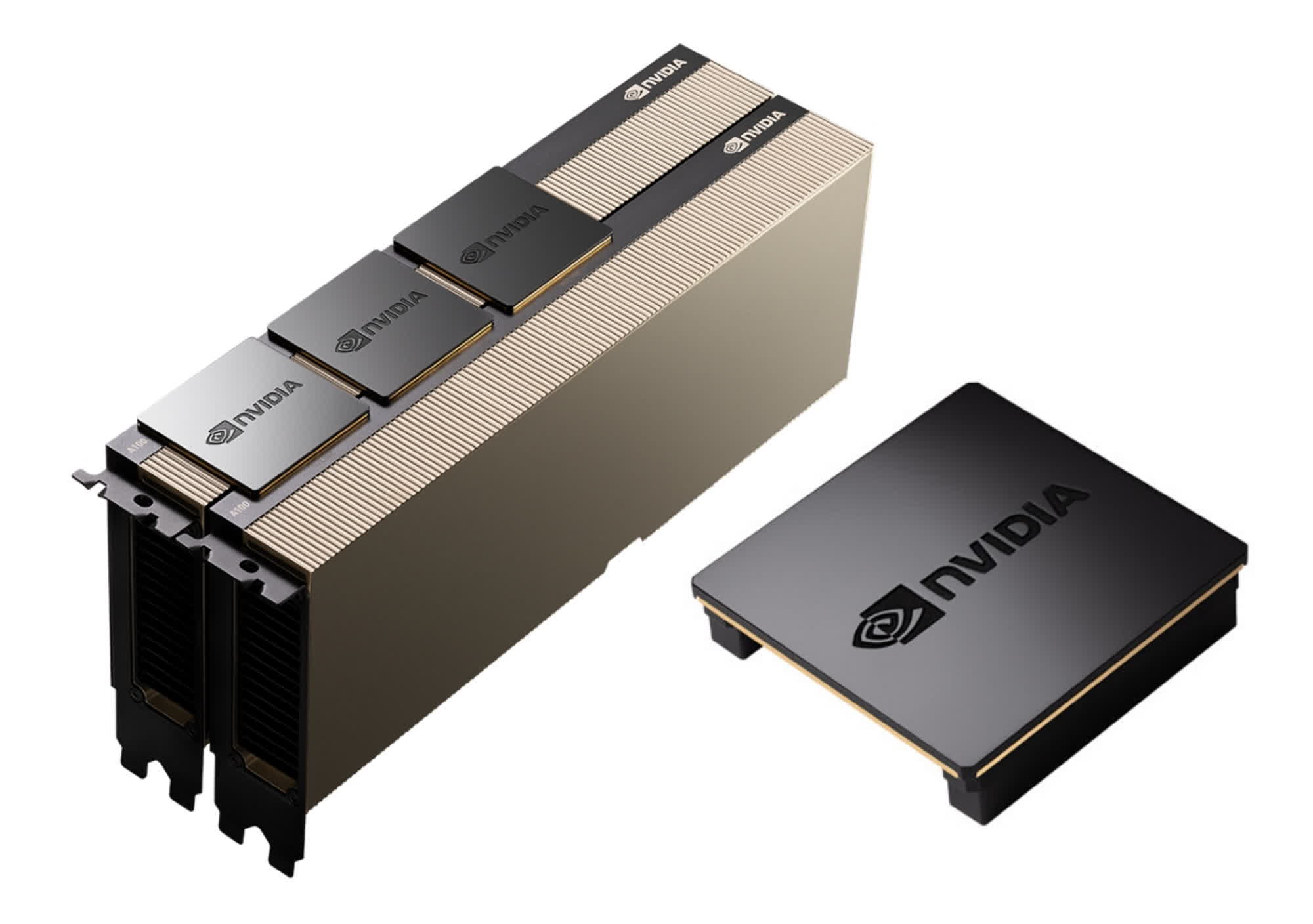

Currently, Nvidia provides the most powerful accelerators for running the largest AI models. Its NVLink technology facilitates rapid data exchange between the hundreds of GPUs installed in these AI server clusters. UALink hopes to define a standard interface for AI and machine learning, HPC, and cloud computing, with high-speed, low-latency communications for all brands of AI accelerators, not just Nvidia's.

The group expects an initial 1.0 specification to land during the third quarter of 2024. The standard will connect up to 1,024 accelerators within an "AI computing pod," allowing direct loads and stores between the memory attached to accelerators such as GPUs.

AMD VP Forrest Norrod noted that the work the UALink group is doing is essential for the future of AI applications. Likewise, Broadcom said it was "proud" to be a founding member of the UALink consortium to support an open ecosystem for AI connectivity.