When people unfamiliar with the technology picture artificial intelligence, they may imagine Will Smith’s blockbuster I, Robot, the sci-fi thriller Ex Machina, or the Disney movie Smart House: nightmarish scenarios in which intelligent machines take over, to the doom of the humans around them.

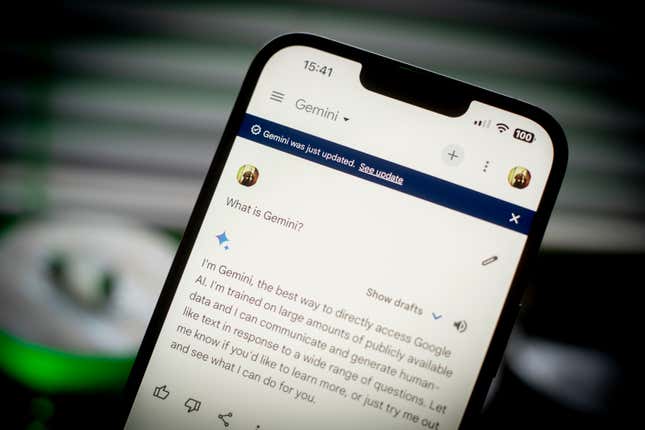

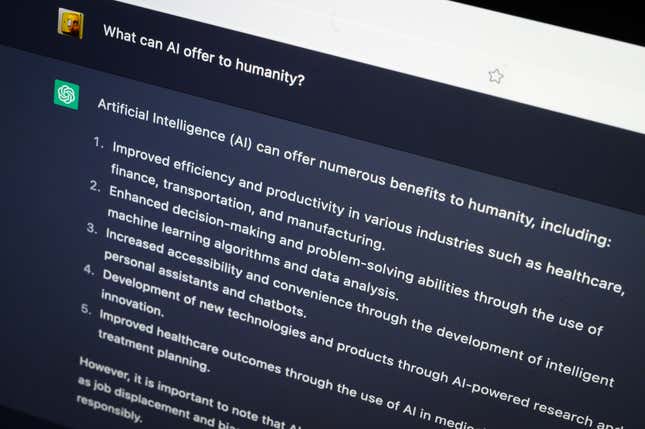

Today’s generative AI technologies aren’t quite all-powerful yet. Sure, they may be capable of sowing disinformation to disrupt elections or leaking trade secrets. But the tech is still in its early stages, and chatbots continue to make big mistakes.

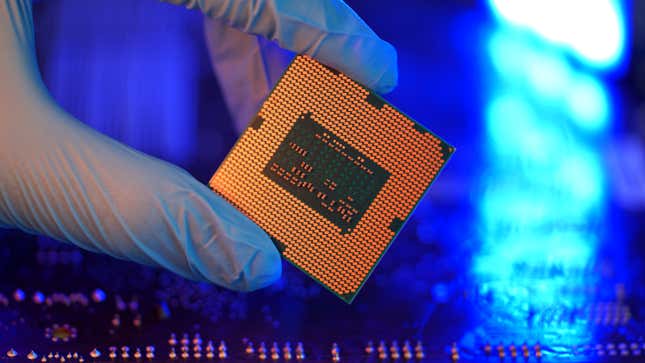

Still, the newness of the technology is also bringing new terms into play. What is a semiconductor, anyway? How is generative AI different from other kinds of artificial intelligence? And do you really need to know the differences between a GPU, a CPU, and a TPU?

If you’re looking to keep up with the new jargon the sector is slinging around, Quartz has your guide to its core terms.