Forward-looking: Power efficiency is the name of the game these days, and every chip designer strives to squeeze more performance out of less energy. A secretive startup called Efficient Computer Corp. claims to have taken that to a whole new level with their newly announced processor architecture.

Efficient Computer emerged from stealth mode this week, revealing its "Fabric" chip design. According to the company, this novel CPU can deliver performance on par with current offerings while using a tiny fraction of the electricity. The company claims Fabric is 100 times more energy-efficient than "the best embedded von Neumann processor," and 1,000 times more efficient than power-intensive GPUs. If true, those would be mind-blowing improvements.

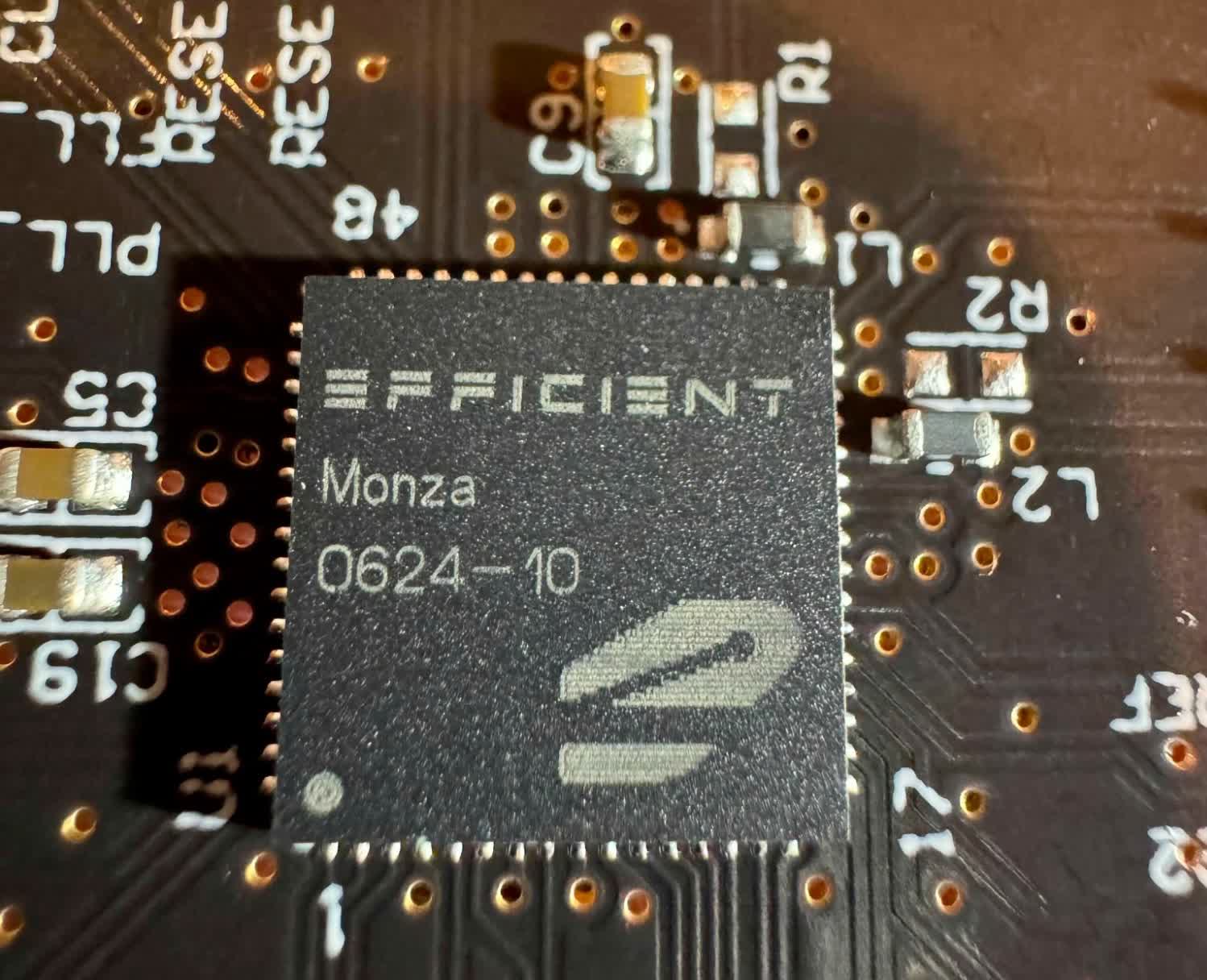

In a press release, Efficient Computer announced that it has already implemented the Fabric processor architecture in its 'Monza' test system on a chip (SoC).

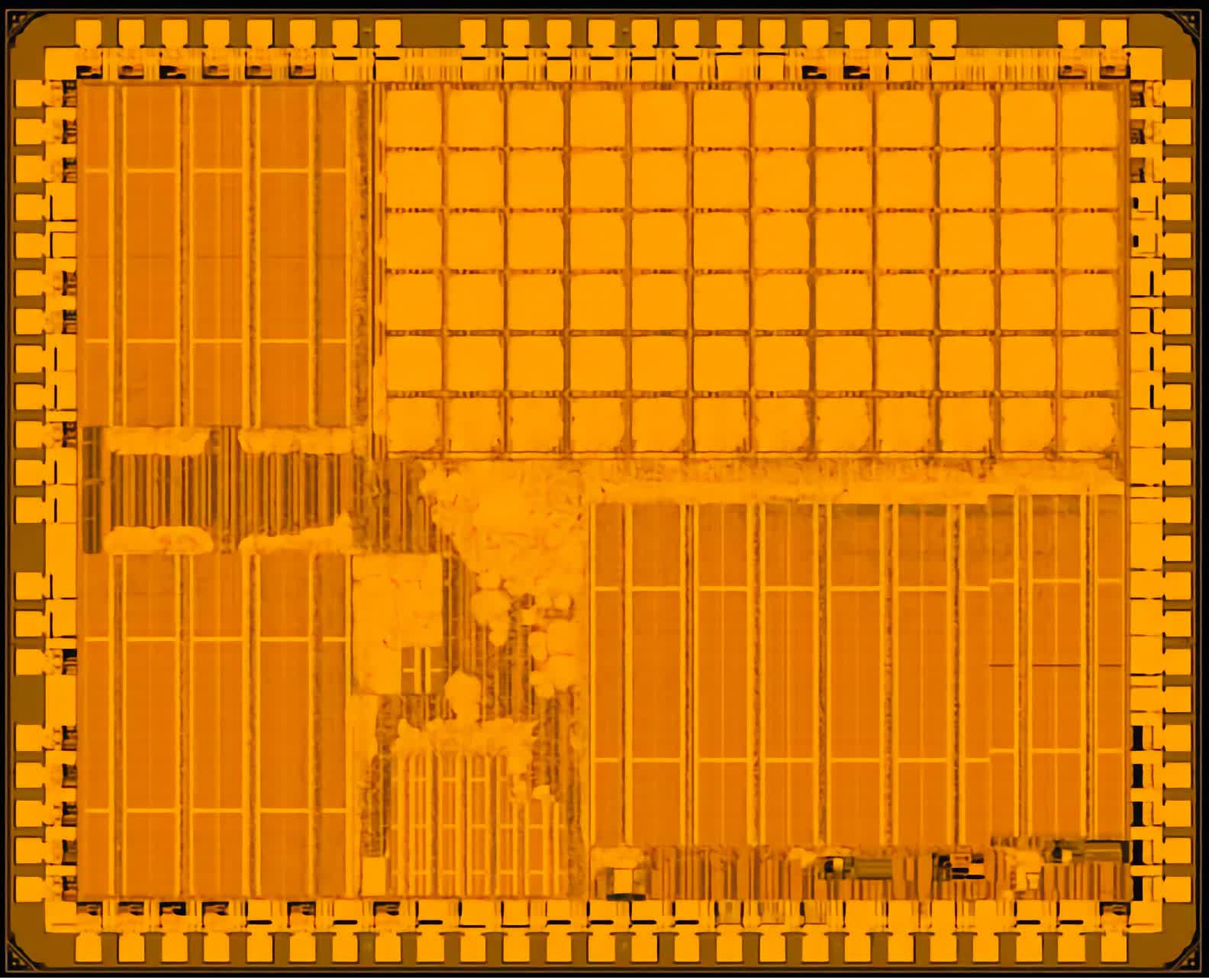

So what's their secret sauce? The details get pretty technical, but the key idea behind Fabric is optimizing for parallelism from the ground up. General-purpose CPUs are incredibly complex, carrying around tons of legacy cruft to maintain backwards compatibility. Efficient Computer strips all that away and uses a simplified, reconfigurable dataflow architecture to execute code across numerous parallel computing elements.

The innovative design stems from over seven years of research at Carnegie Mellon University. It exploits spatial parallelism by executing different instructions simultaneously across the physical layout of the chip. An efficient on-chip network links these parallel computing elements.
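To make the dataflow idea more concrete, here is a minimal Python sketch of how such a model might behave. It is not Efficient Computer's actual design, which the company has not described in detail. The graph, the node names, and the `run_dataflow` helper are all hypothetical, chosen only to show that an operation can fire as soon as its operands are ready, so independent operations can run at the same time on separate computing elements.

```python
import operator

# Hypothetical dataflow graph for (a + b) * (c - d).
# Each node maps a name to (operation, list of input names).
GRAPH = {
    "sum": (operator.add, ["a", "b"]),
    "diff": (operator.sub, ["c", "d"]),
    "prod": (operator.mul, ["sum", "diff"]),
}


def run_dataflow(graph, inputs):
    """Fire every node as soon as all of its operands are available.

    Returns (values, waves). Each entry in waves lists the nodes that
    became ready in the same step, meaning they could execute in
    parallel on separate computing elements.
    """
    values = dict(inputs)
    pending = dict(graph)
    waves = []
    while pending:
        # A node is ready when every one of its inputs has a value.
        ready = sorted(
            name
            for name, (_, deps) in pending.items()
            if all(dep in values for dep in deps)
        )
        if not ready:
            raise ValueError("graph has a cycle or a missing input")
        # Compute all ready nodes from the values known before this step.
        results = {
            name: pending[name][0](*(values[dep] for dep in pending[name][1]))
            for name in ready
        }
        values.update(results)
        for name in ready:
            del pending[name]
        waves.append(ready)
    return values, waves


values, waves = run_dataflow(GRAPH, {"a": 2, "b": 3, "c": 10, "d": 4})
print(values["prod"])  # 30
print(waves)           # [['diff', 'sum'], ['prod']]
```

In this toy example the addition and subtraction do not depend on each other, so they land in the same wave, while the multiplication waits for both results. A conventional processor would instead fetch and decode these as a sequence of instructions.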

Efficient's software stack also supports major embedded languages and frameworks, including C, C++, TensorFlow, and some Rust applications, enabling app developers to quickly recompile their code for the Fabric architecture. The startup indicates that Fabric's compiler was "designed alongside the hardware from day one."

Nevertheless, this need for software recompilation could limit mainstream adoption. Recompiling every application is a tall order for conventional consumer devices, which depend on large libraries of existing software. So, at least initially, the target markets are specialized sectors such as health devices, civil infrastructure monitoring, satellites, defense, and security – areas where the power advantages would be most valuable.

Under the hood, Fabric presents a unique approach to parallel computing. While Efficient Computer is tight-lipped about the specifics of the processing architecture, their descriptions hint at a flexible, reconfigurable processor that can optimize itself for different workloads through software-defined instructions.

The company recently locked down $16 million in seed funding from Eclipse Ventures, likely aimed at launching its first Fabric chips. Efficient Computer reports having already inked deals with unnamed partners and is targeting production silicon shipping in early 2025.

https://www.techspot.com/news/102201-startup-claims-100x-more-efficient-processor-than-current.html