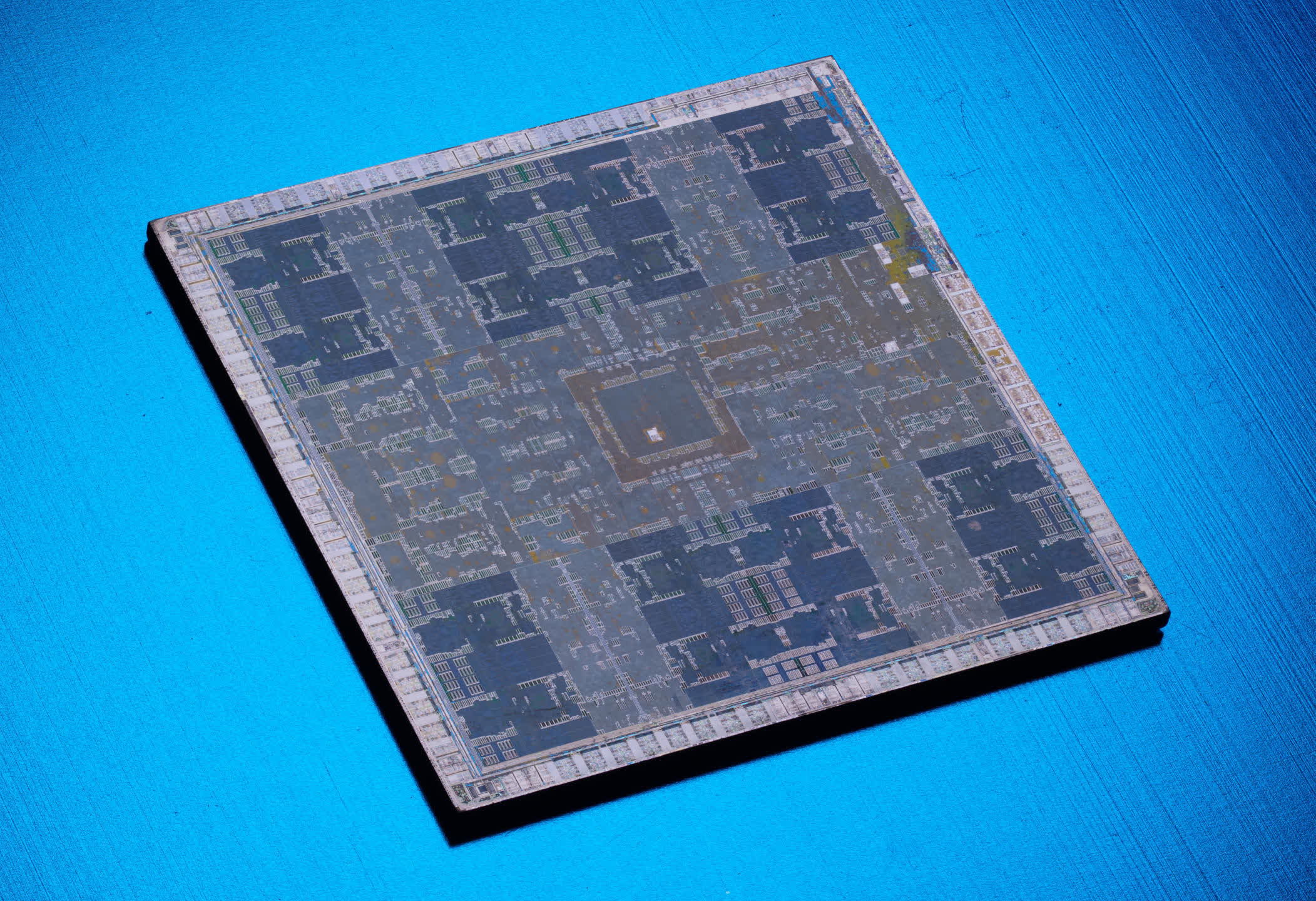

Gone are the days when the sole function of a graphics chip was graphics. Let's explore how the GPU evolved from a modest pixel pusher into a blazing powerhouse of floating-point computation.


Goodbye to Graphics: How GPUs Came to Dominate Compute and AI

- Thread starter neeyik


m3tavision

Posts: 1,420 +1,196

This is a great article...!

The first video card* I ever bought was called the Video Toaster, for my Amiga 4000.


Vanderlinde

Posts: 412 +266

takaozo

Posts: 923 +1,456

Thanks for the content; it was an interesting read on this hot summer day.

Actually, even with the first GPUs and their fixed pipelines, you could do some GPGPU, but you had to disguise and encode the data as textures and/or vertices. One improvement came with early GPUs that could write to a texture, which sped up execution by avoiding much of the download-modify-upload round trip to GPU memory. Then general-purpose shaders arrived, which allowed more general computing.

Currently, I don't know why many "GPUs" used in servers are called that, since few or none have any accelerated graphics capacity, say ROPs, geometry processors or other units. I would purely call them accelerators.


punctualturn

Great article.


It's refreshing to read a balanced article instead of the typical ones that seem to come directly from Ngreedia's Marketing Dept.

Except for this part:

AMD's CDNA 2-powered MI250X offers just under 48 TFLOPS of FP64 throughput and 128 GB of High Bandwidth Memory (HBM2e), whereas Nvidia's GH100 chip, using its Hopper architecture, uses its 80 billion transistors to provide up to 4000 TFLOPS of INT8 tensor calculations.

One of the metrics used deliberately produces lower numbers for... reasons, but anyway.

The figures were chosen simply to show how the MI250X, GH100, and Ponte Vecchio have specifications that are far beyond anything one would normally experience in an everyday computer.


punctualturn

So are you telling me that FP64 numbers are the same as INT8?

But yes, I know these parts are monsters at their respective workloads.

AdamNovagen

Posts: 42 +52

Fair enough, and I don't think this was some kind of deliberate dig at AMD, but the use of the word "whereas" in the original sentence implies it's a direct comparison of "this one does this much, but this other one does THIS much" when in reality FP64 is worlds apart from INT8 as a form of workload. I think a full stop or semicolon followed by a "meanwhile" for the nVidia and Intel mentions would have been better, since that reads more like a non-comparative statement of each of the three architectures' outputs in their intended niche.

Thanks for the feedback. I've updated the text slightly.

Mr Majestyk

Posts: 2,041 +1,883

For mathematical simulations such as fluid dynamics and electromagnetics, fp64 (commonly known as double precision) is a must; fp32 simply will not cut the mustard. Even 20 years ago, we were working on electromagnetic optical fibre simulations where fp64 was barely adequate for the accuracy we needed. fp128 would have been nice.
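To make the precision point concrete, here is a minimal sketch (not taken from the article or from any real simulation code) that adds 0.1 a million times at fp32 and at fp64. Rounding to fp32 via `struct` is an illustrative stand-in for hardware single precision, and the names `to_fp32` and `accumulate` are hypothetical.

```python
import struct


def to_fp32(x: float) -> float:
    """Round a Python float (which is fp64) to the nearest fp32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(step: float, count: int, use_fp32: bool) -> float:
    """Add `step` to a running total `count` times at the chosen precision."""
    if use_fp32:
        step = to_fp32(step)
    total = 0.0
    for _ in range(count):
        total += step
        if use_fp32:
            # Round after every add, as single-precision hardware would.
            total = to_fp32(total)
    return total


COUNT = 1_000_000
EXPECTED = 100_000.0  # mathematically exact result of adding 0.1 a million times

err_fp32 = abs(accumulate(0.1, COUNT, use_fp32=True) - EXPECTED)
err_fp64 = abs(accumulate(0.1, COUNT, use_fp32=False) - EXPECTED)

print(f"fp32 absolute error: {err_fp32:.6f}")
print(f"fp64 absolute error: {err_fp64:.6f}")
```

The fp32 error should come out many orders of magnitude larger than the fp64 one, and a long-running simulation performs far more dependent operations than this toy loop.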

In the past, the fp64 performance of GPUs has been poor. Are we at the stage yet where fp64 is no worse than 0.5x fp32 for high-end products like the MI300, H100, Ponte Vecchio, etc.?


"Obvious. GPUs are excellent at one thing, whereas an x86 or x64 CPU is good at many things. When you can use all that computational power in a different way, GPUs will outperform even the best CPUs."

I wonder if there will be an AI that would actually work much better on CPUs. Could something take advantage of doing different things and be more helpful than the things current AI apps do?

FaTaL

Posts: 254 +363

"Fast forward to today, and the GPU has become one of the most dominant chips in the industry."

Because GPUs do parallel processing and CPUs don't. The best analogy I've read is that if you took a book and gave it to a CPU, it would read each page individually.

A GPU will take all the pages from the book and read them at the same time.

I don't know a lot about CPU architecture, but I'm wondering what limitations prevent CPU designs from doing full-on parallel processing like GPUs. My guess is it has something to do with binary?

Actually, general-purpose computing was already being done with the first GPUs, albeit in a very rudimentary and convoluted way. You had to use textures and vertex arrays as matrices, vectors, and data sets; do render-to-texture and read pixels back from the framebuffer to get results; and use graphical operations from the fixed pipeline to do the computation. Then shaders came along, which made it a little easier.
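For anyone who never saw that trick, here is a rough sketch of the idea in plain Python. It only emulates the workflow and touches no real graphics API: `pack_to_texture`, `render_to_texture`, `read_pixels`, and `saxpy_shader` are all hypothetical names, and a real GPU would evaluate the per-texel function in parallel rather than in a loop.

```python
from typing import Callable, List, Tuple

Texel = Tuple[float, ...]    # one RGBA texel carries four floats
Texture = List[List[Texel]]  # rows of texels


def pack_to_texture(data: List[float], width: int) -> Texture:
    """Pad a flat vector and store it four values per RGBA texel."""
    per_row = width * 4
    padded = data + [0.0] * (-len(data) % per_row)
    texels = [tuple(padded[i:i + 4]) for i in range(0, len(padded), 4)]
    return [texels[r:r + width] for r in range(0, len(texels), width)]


def render_to_texture(shader: Callable[..., Texel], *inputs: Texture) -> Texture:
    """Run the 'fragment program' once for every output texel."""
    height, width = len(inputs[0]), len(inputs[0][0])
    return [
        [shader(*(tex[y][x] for tex in inputs)) for x in range(width)]
        for y in range(height)
    ]


def read_pixels(target: Texture, count: int) -> List[float]:
    """Flatten the render target back into a vector, dropping the padding."""
    flat = [channel for row in target for texel in row for channel in texel]
    return flat[:count]


def saxpy_shader(x: Texel, y: Texel) -> Texel:
    """Per-texel program computing a*x + y on all four channels."""
    a = 2.0
    return tuple(a * xi + yi for xi, yi in zip(x, y))


xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [10.0, 20.0, 30.0, 40.0, 50.0]
target = render_to_texture(saxpy_shader, pack_to_texture(xs, 2), pack_to_texture(ys, 2))
result = read_pixels(target, len(xs))
print(result)
```

The awkward part back then was exactly what the sketch hides: every input had to be squeezed into a pixel format, and every result had to be read back out of one.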


A decent read for nostalgia, but I would have liked to have seen some more detail in the section about 3dfx Voodoo. The story mentions the Voodoo5, but by then, 3dfx was about to exit the market as Nvidia got their act together. Voodoo 1 and 2 were kings and that was the peak for them. Voodoo 3 had some time, but then it was downhill.

I was a local business computer tech around those times, and some fellow techs were always blowing all their money to buy the latest gear to game with and show off. I had a Voodoo 1 though. When the 3dfx Voodoo 1 3D accelerator hit, there was really no competition (surely not at a price point for consumers). Playing Quake was suddenly amazing with it, even at only 640x480. Before that, there were just 2D cards with chipsets from Nvidia or ATI on boards from vendors like Diamond, and Matrox had their own chips, etc.

It wasn't really until after the Voodoo2 was out dominating at 800x600 (or you could run two in SLI at 1024x768) that Nvidia, after their NV3 Riva 128 and ATI's Rage fell short, released new Quake 2 drivers for their new NV4 Riva TNT graphics cards. Those drivers fixed the framerates and came close enough in 3D acceleration to compete with 3dfx (in a native 2D/3D graphics card, without a secondary accelerator add-in card), and their ascension began.

It's a nice story, because the drama had 3dfx emerge out of nowhere to leap over just about all of the big graphics companies at that time and take a dominant lead. Then Nvidia bounced back, later moved ahead of the Voodoo3 with their TNT2, and aside from some competition from ATI (later AMD), Nvidia has been hard to knock out.


Endymio

Posts: 2,354 +2,438

It's difficult to tell if your choice of sobriquet is the usual hypocritically inchoate anti-capitalist rant, or simply an assault on NVidia for besting AMD in the marketplace. Could you clarify?

CPUs do parallel processing as well, and -- in extremely general terms -- in the same manner as GPUs: by adding more cores. But while a CPU may have 6, 8, or even 32 cores, the GPU in the 4090 has 16,384 cores.

Somewhat more specifically, the architectural reason that GPUs can pack in so many more cores is that they use a so-called SIMD parallel model, where a single instruction is carried out on multiple items of data at once. CPUs use MIMD parallelism, where each core executes fully independently of the others.
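As a toy illustration of that distinction (a sketch only; real GPUs and CPUs are far more nuanced, and `simd_step` and `mimd_step` are made-up names), the SIMD version below fetches and decodes one instruction and applies it to every lane, while the MIMD version needs one instruction stream per core:

```python
from typing import Callable, List, Tuple

Op = Callable[[int], int]


def simd_step(op: Op, lanes: List[int]) -> Tuple[List[int], int]:
    """Apply ONE instruction to every data lane; only one instruction is issued."""
    return [op(value) for value in lanes], 1


def mimd_step(ops: List[Op], values: List[int]) -> Tuple[List[int], int]:
    """Each core runs its OWN instruction on its own data; one issue per core."""
    return [op(value) for op, value in zip(ops, values)], len(ops)


data = [1, 2, 3, 4]

# SIMD: the same "multiply by two" instruction hits all four lanes.
simd_out, simd_issued = simd_step(lambda v: v * 2, data)

# MIMD: four independent cores, each doing something different.
programs: List[Op] = [
    lambda v: v + 1,
    lambda v: v * 2,
    lambda v: v ** 2,
    lambda v: -v,
]
mimd_out, mimd_issued = mimd_step(programs, data)

print(simd_out, "instructions issued:", simd_issued)
print(mimd_out, "instructions issued:", mimd_issued)
```

Sharing the fetch and decode logic across lanes is what leaves room for so many more arithmetic units; the trade-off is that every lane has to be doing the same thing at the same time.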

You are looking for this:

3Dfx Interactive: Gone But Not Forgotten

3Dfx's Voodoo and Voodoo2 graphics are widely credited with jump-starting 3D gaming and revolutionizing computer graphics nearly overnight. The 3D landscape in 1996 favored S3 Graphics with...

www.techspot.com
