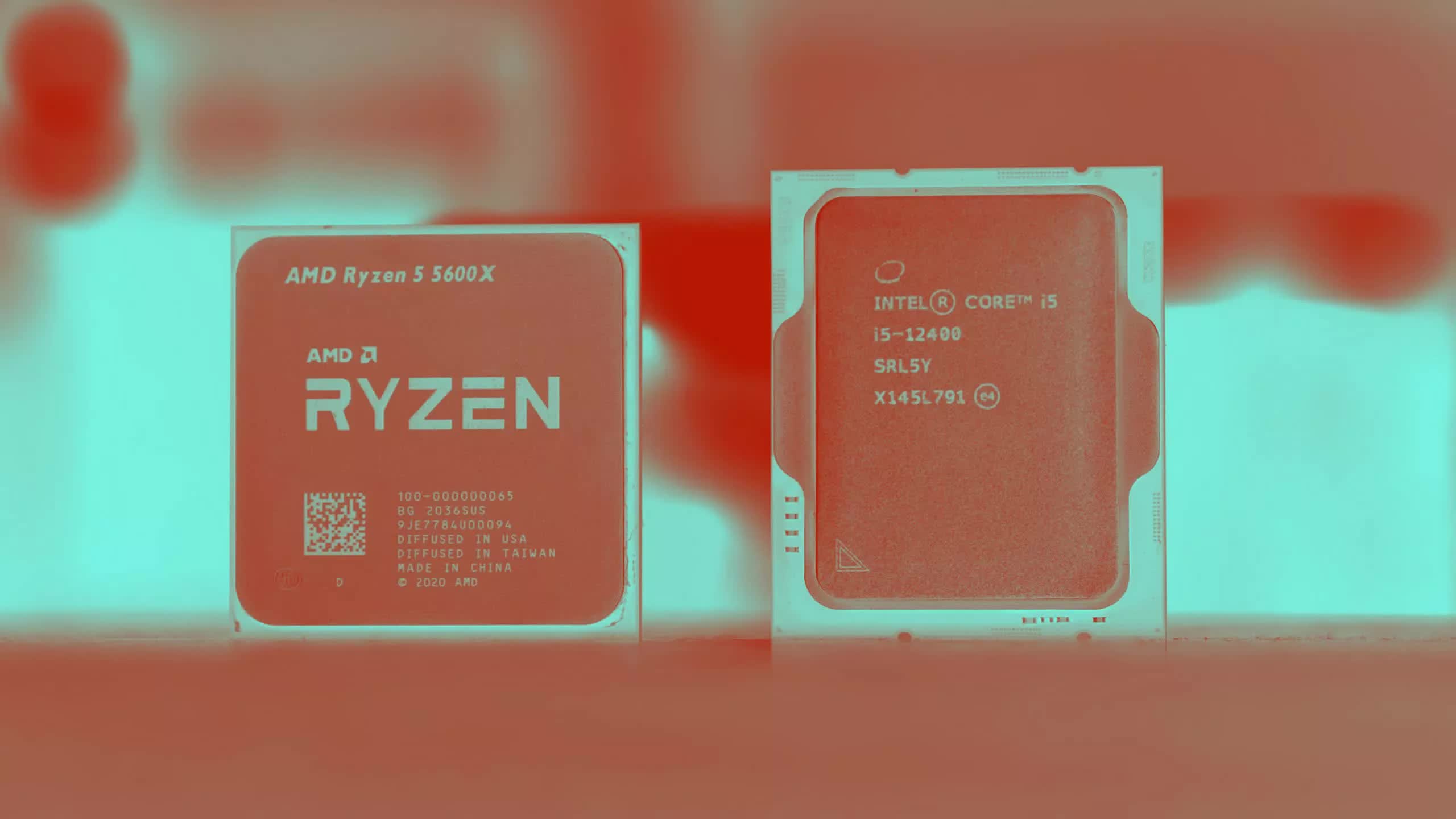

In this article, we'll take a look at how L3 cache capacity affects gaming performance. More specifically, we'll be examining AMD's Zen 3-based Ryzen processors in a "for science" type of feature.

CPU Cache vs. Cores: AMD Ryzen Edition

- Thread starter Steve

WhiteLeaff

Posts: 379 +672

It would be more useful if the clock speeds were equalized.

I don't understand why you'd limit yourself to presenting data we already know.

gamerk2

Posts: 927 +923

As I noted years ago: there's a point where adding more cores no longer improves performance, simply because the code can't be made any more parallel. And it's not at all shocking that the majority of titles start hitting that wall in the 6-8 core range. After that you're chasing incremental gains (and can even see small losses due to cache coherency and scheduling overhead).

So yeah, these new 12x2-core CPUs at the high end are great... but you really don't need them when a basic 8-core with a ton of cache will run circles around them in the majority of tasks.

WhiteLeaff

Posts: 379 +672

More cores help to compile shaders quickly, though.

Very interesting! I was thinking of upgrading from my 5600X to a 5800X3D, but given this and the 5600X3D review, I'll go for the 5600X3D... next time I happen to drive by a Micro Center.

Thank you, Steve

bluetooth fairy

Posts: 232 +161

Excuse me, but what GPU are you using with the 5600X, and what games are you usually playing?

bluetooth fairy

Posts: 232 +161

As a side note: if it's 6c/12t and 8c/16t, it would be better to show that on the charts, because 6c/6t != 6c/12t in gaming, especially considering AMD's SMT implementation. That's probably why 12 threads are still enough for gaming.

--------

PS: 6c/12t was comparable to 8c/8t, as far as I can recall. But that's a story for another article, I guess.

MarcusNumb

Posts: 148 +283

Another sign that AMD is doing a good job with its CPUs. Hopefully one day it can do as well with its GPUs.

I'm using a 4070 Ti. I play mostly action games, but lately it's been BG3. Cyberpunk... it's kind of a mess with this setup. I'm hoping the new CPU will help with stuttering and 1% lows. But knowing me, I'll get obsessed with some 2D game and then it won't even matter, haha. Heat Signature will be the death of me.

So let's downgrade our monitors to 1080p to put our 4090s to good use on our EOL systems.

I'm just kidding; I know why it's done this way. At least the 720p benchmarks went away with the 4090. Still, these tests seem to produce interesting results until they meet reality, where Intel 10th-gen Comet Lake and AMD Zen 3 rigs are rarely paired with a top-tier GPU.

And even if you do pair them, the natural habitat for a high-cost RTX 4090 is a high-cost 4K/5K monitor and maybe, just maybe, a high-refresh WQHD one (but then you most likely overpaid for your GPU).

Anyway, if you bench for reality, those differences become narrow and boring very fast. So for me this is not an interesting test. Your mileage may vary.

ScottSoapbox

Posts: 957 +1,690

Nice story, bro.

I found this test very interesting.

- TechSpot is dedicated to computer enthusiasts and power users.
