Forward-looking: New research details a process that allows a CPU, GPU, and AI accelerator to work seamlessly in parallel on separate tasks. The pioneering breakthrough could provide blazing-fast, energy-efficient computing – promising to double overall processing speed at less than half the energy cost.

Researchers at the University of California Riverside have developed a technique called Simultaneous and Heterogeneous Multithreading (SHMT), which builds on contemporary simultaneous multithreading. Simultaneous multithreading splits a CPU core into numerous threads, but SHMT goes further by incorporating the graphics and AI processors.

The key benefit of SHMT is that these components can simultaneously crunch away on entirely different workloads, optimized to each one's strength. The method differs from traditional computing, where the CPU, GPU, and AI accelerator work independently. This separation requires data transfer between the components, which can lead to bottlenecks.

Meanwhile, SHMT uses what the researchers call a "smart quality-aware work-stealing (QAWS) scheduler" to manage the heterogeneous workload dynamically between components. This part of the process aims to balance performance and precision by assigning tasks requiring high accuracy to the CPU rather than the more error-prone AI accelerator, among other things. Additionally, the scheduler can seamlessly reassign jobs to the other processors in real time if one component falls behind.

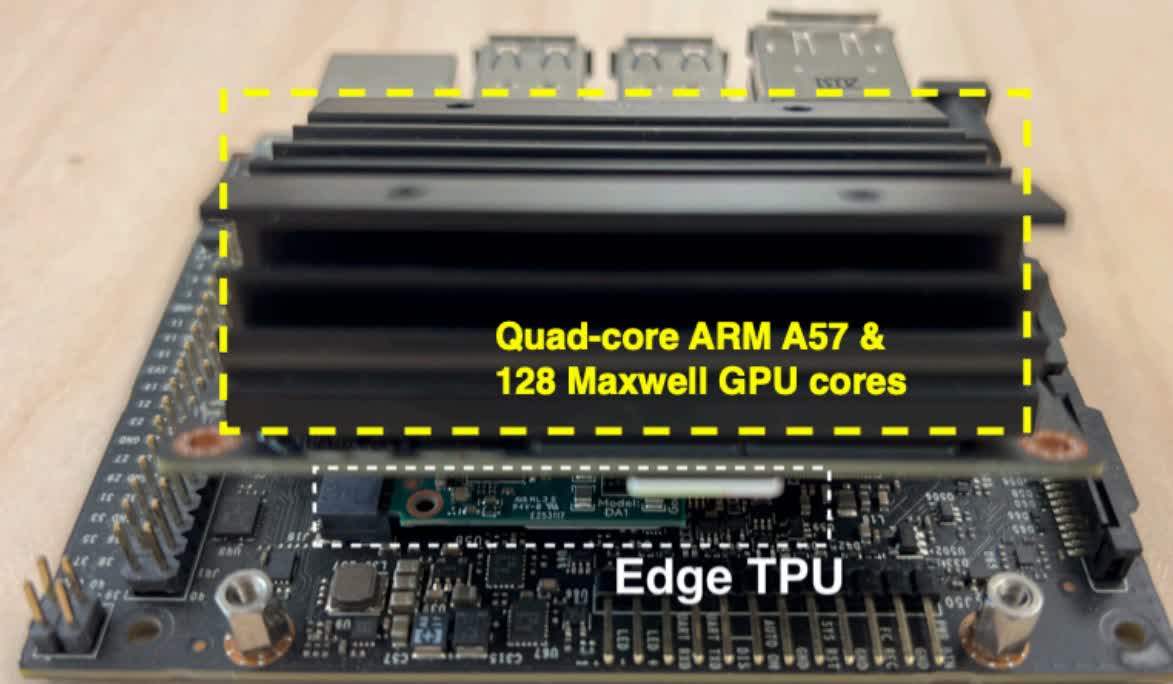

In testing, SHMT boosted performance by 95 percent and sliced power usage by 51 percent compared to existing techniques. The result is an impressive 4x efficiency uplift. Early proof-of-concept trials utilized Nvidia's Jetson Nano board containing a 64-bit quad-core Arm CPU, 128-core Maxwell GPU, 4GB RAM, and an M.2 slot housing one of Google's Edge TPU AI accelerators. While it's not precisely bleeding-edge hardware, it does mirror standard configurations. Unfortunately, there are some fundamental limitations.

"The limitation of SHMT is not the model itself but more on whether the programmer can revisit the algorithm to exhibit the type of parallelism that makes SHMT easy to exploit," the paper explains.

In other words, it's not a simple universal hardware implementation that any developer can use. Programmers have to learn how to do it or develop tools to do it for them.

If the past is any indication, this is no easy feat. Remember Apple's switch from Intel to Arm-based silicon in Macs? The company had to invest significantly in its developer toolchain to make it easier for devs to adapt their apps to the new architecture. Unless there's a concerted effort from big tech and developers, SHMT could end up a distant dream.

The benefits also depend heavily on problem size. While the peak 95-percent uplift required maximum problem sizes in testing, smaller loads saw diminishing returns. Tiny loads offered almost no gain since there was less opportunity to spread parallel tasks. Nonetheless, if this technology can scale and catch on, the implications could be massive – from slashing data center costs and emissions to curbing freshwater usage for cooling.

Many unanswered questions remain concerning real-world implementations, hardware support, code optimizations, and ideal use-case applications. However, the research does sound promising, given the explosion in generative AI apps over the past couple of years and the sheer amount of processing power it takes to run them.