The Best Places To Invest In The Self-Driving Car Industry

Summary

- The article discusses the self-driving car industry, focusing on Tesla, Inc.'s strategic missteps and its competitors, including Waymo, Cruise, Baidu's Apollo, and Mobileye.

- It delves into the computational algorithms behind self-driving cars, the challenges of reinforcement learning, and the current levels of driving automation among major players.

- The article concludes that Baidu may be the best investment option for those looking to bet on the self-driving business, as Tesla's technology lags behind its competitors.


As a data scientist, I believe the value I add is analyzing companies riding the wave of AI and providing color on the underlying tech. As someone who also understands fundamental analysis, I hope to marry these two skills and help you understand companies from a different investment perspective than what you typically read on Seeking Alpha. Feel free to look at my profile to see my other deep dives.

I would like to talk about self-driving cars, and especially Tesla, Inc. (TSLA). First, I want to analyze the industry from an algorithmic perspective. By that I mean how the machine learning algorithms used for self-driving cars affect the economics of the automobile industry. A central issue is that TSLA has kneecapped its self-driving capabilities through a series of design choices, leaving it at a disadvantage compared to competitors like Waymo (GOOG), Cruise (GM), Baidu's (BIDU) Apollo, and Mobileye (MBLY).

From a business perspective (ignoring valuation), MBLY seems to be the best positioned. Because Cruise is attached to a terrible business in GM, its value may be hidden from investors, and GM is a good way to invest in a self-driving business if you can stomach the nightmarish cyclicality of an auto business. BIDU's Apollo may be an international play that is arguably as cheap as GM but attached to a safer business. The caveat is that you trade business-cycle risk for geopolitical risk.

In total, I hope you walk away from this piece with a more holistic understanding of the self-driving industry, with a special focus on how the math affects the business.

Industry Background

The canonical place to start is the 2004 DARPA Grand Challenge for driverless vehicles. This was a race, funded by the Pentagon's DARPA research agency, with a $1 million prize for the team that completed the 142-mile Mojave Desert course the fastest. No car finished. In fact, the Carnegie Mellon team, which traveled the furthest, covered a modest 7.3 miles. Since then, the technology has proceeded in fits and starts, but now, almost 20 years later, we are getting close to fully autonomous self-driving cars.

A huge advantage we have 20 years later is machine-learning technology that can learn the rules of driving on its own by practicing on the open road. Much of the work in the DARPA era consisted of thinking up all the rules a car needs to obey (not just traffic laws, but simple things like how far to turn the wheel on a turn) and writing them in code for the car to follow. Driving, however, seemed like a perfect fit for machine learning (although, as discussed below, it proved harder than expected) because, to borrow Justice Potter Stewart's famous line about pornography, we know a good driver when we see one, but we can't really explain what makes that driver good. In this domain, writing down rules does not scale because there are too many edge cases.

In fact, even though machine learning lets programs effectively program themselves, there seem to be almost too many edge cases for even 100 million miles of driving experience. Still, because hand-written rules could not cover those edge cases, engineers moved toward using machine learning to learn how to drive. At the same time, teams recognized there was no substitute for collecting millions and millions of miles of driving data, allowing the software to learn from experience how to be a good driver.

The Computational Algorithms Behind Self-Driving Cars

There are two strategies for designing self-driving cars: reinforcement learning (RL) and control theory. In 2004, control theory combined with a rule-based driving system was the only option. The idea behind control theory is that there is a desired state for the car, i.e., on the right-hand side of the road, driving forward. As the car deviates from that state (say, drifting left), the system measures the deviation from the "optimal" state and chooses an action to correct it. The state can be quite complicated, involving the current location, how fast the deviation is changing (a derivative term), the deviation accumulated over time (an integral term), images from the front, back, left, and right, radar analysis, and much more. So it's not a simple approach, but its performance floor is higher because it is comparatively easier to get working than reinforcement learning.
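To make the control-theory idea concrete, here is a minimal sketch of a PID (proportional-integral-derivative) controller holding a car at the lane centre. The one-dimensional "lateral offset" model, the constant crosswind, and the gains are all illustrative assumptions, not anything a production system uses.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """A textbook PID controller acting on a scalar error signal."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        # Integral term: deviation accumulated over time.
        self.integral += error * dt
        # Derivative term: how fast the deviation is changing.
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_lane_keeping(steps: int = 500, dt: float = 0.05) -> float:
    """Toy model (assumed): the steering command directly sets lateral velocity.

    A constant crosswind pushes the car left, so the integral term is
    needed to remove the steady-state offset. Returns the final offset.
    """
    controller = PID(kp=1.5, ki=0.5, kd=0.1)
    offset = 1.0       # metres from lane centre (positive = drifted left)
    crosswind = 0.3    # hypothetical constant leftward drift, metres per second
    for _ in range(steps):
        error = 0.0 - offset            # desired offset is zero
        steer = controller.step(error, dt)
        offset += (steer + crosswind) * dt
    return offset


print(simulate_lane_keeping())
```

The controller never "learns" anything: an engineer chose the state, the error signal, and the three gains. Setting `ki=0` in this toy leaves the car permanently displaced by the crosswind, which is why the integral term exists.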

There are still companies building self-driving vehicles with control theory, and Boston Dynamics uses control theory to program its now-ubiquitous robot dogs. So control remains a viable alternative to reinforcement learning in robotic automation, but the industry consensus, especially among the companies with the most advanced self-driving software, is that RL's higher ceiling makes it the better tool.

I'm not certain when RL came to be viewed as a viable way to program self-driving cars, but NeurIPS 2016 had a session on using RL for transportation for the first time. The term itself comes from psychology. The father of reinforcement in psychology, B.F. Skinner, would test hungry rats. They would run around their pen, and when they hit a lever, some food dropped out. After experimenting some more, the rats realized that taking the action of pushing the lever, in the state of being next to the lever, leads to the reward of food. Likewise, for a self-driving car algorithm, successfully driving from point A to point B results in a reward (the taxi fare gets paid), and that reward reinforces good driving behavior.
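Skinner's lever experiment maps directly onto tabular Q-learning, a standard RL algorithm. The sketch below is a hypothetical two-state "Skinner box": the states, actions, rewards, and hyperparameters are all illustrative assumptions. The agent learns from reward alone that pressing the lever pays off only when it is next to it.

```python
import random

AWAY, NEXT_TO_LEVER = 0, 1   # states
MOVE, PRESS = 0, 1           # actions


def step(state: int, action: int) -> tuple[int, float]:
    """Hypothetical environment: food only drops when pressing next to the lever."""
    if action == MOVE:
        return (NEXT_TO_LEVER if state == AWAY else AWAY), 0.0
    reward = 1.0 if state == NEXT_TO_LEVER else 0.0
    return state, reward


def q_learning(episodes=500, steps=20, alpha=0.1, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0], [0.0, 0.0]]   # q[state][action]: estimated long-run reward
    for _ in range(episodes):
        state = AWAY
        for _ in range(steps):
            # Epsilon-greedy: mostly exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice([MOVE, PRESS])
            else:
                action = max((MOVE, PRESS), key=lambda a: q[state][a])
            next_state, reward = step(state, action)
            # Move the estimate toward reward + discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q = q_learning()
print(q)
```

After training, the greedy policy is to move toward the lever when away from it and press it when next to it. A driving agent faces the same loop, only with an astronomically larger state space.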

Discussing the Nuances of Reinforcement Learning and Self-Driving Cars

One problem with this approach is that an agent that starts out acting randomly would almost certainly never manage to drive from point A to point B without getting into an accident. So you need intermediate rewards, such as a reward for moving forward between the lane markings on a road. Another way to improve performance before facing the dangerous consequences of testing in the real world is to train the algorithm in simulation first.
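A minimal sketch of such intermediate ("shaped") rewards, assuming a hypothetical per-step interface that reports forward progress, lateral offset from the lane centre, and crash and goal flags. The weights are arbitrary illustrations, not values any company uses.

```python
def shaped_reward(progress_m: float, lane_offset_m: float,
                  crashed: bool, reached_goal: bool) -> float:
    """Hypothetical per-step reward for a driving agent.

    The sparse terms (goal, crash) carry the real objective; the dense
    terms give the agent feedback long before it ever reaches point B.
    """
    if crashed:
        return -100.0                     # sparse: heavy crash penalty
    reward = 0.0
    if reached_goal:
        reward += 100.0                   # sparse: the "fare gets paid"
    reward += 1.0 * progress_m            # dense: forward progress this step
    reward -= 0.5 * abs(lane_offset_m)    # dense: stay between the markings
    return reward


print(shaped_reward(2.0, 1.0, crashed=False, reached_goal=False))
```

The design risk is that the agent optimizes the shaping terms instead of the real goal (for example, creeping forward safely but never arriving), so the weights themselves become something engineers must tune.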

Waymo has logged more than 20 billion miles in simulation. Additionally, you can teach the algorithm how to drive by showing it recordings of good drivers, in particular what the self-driving cameras, lidar, and high-definition (HD) maps should see when the car is operated by a good driver. This is called imitation learning. In this case, in addition to pursuing the reward, the software tries to maximize the likelihood of taking the actions the driver took in the states the driver encountered. By recording good drivers, you can provide training data for self-driving software without risking lives in the real world.
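The simplest form of imitation learning, behavioral cloning, can be sketched in a few lines. This assumes a made-up two-feature state (lane offset, heading error), a linear steering policy, and synthetic "expert" demonstrations with invented gains; real systems learn deep networks from camera and lidar input. Under a Gaussian policy assumption, maximizing the likelihood of the expert's actions reduces to ordinary least squares.

```python
import numpy as np


def record_expert(n: int, rng: np.random.Generator):
    """Hypothetical 'good driver' demonstrations.

    State = (lane offset, heading error); the expert steers back toward
    the lane centre with a little noise. The true gains are made up.
    """
    states = rng.normal(size=(n, 2))
    true_gains = np.array([-0.8, -1.2])
    actions = states @ true_gains + 0.05 * rng.normal(size=n)
    return states, actions


def behavioral_cloning(states: np.ndarray, actions: np.ndarray) -> np.ndarray:
    """Fit a linear policy by maximum likelihood.

    With a Gaussian policy a ~ N(w . s, sigma^2), maximizing the
    likelihood of the expert's actions is ordinary least squares.
    """
    weights, *_ = np.linalg.lstsq(states, actions, rcond=None)
    return weights


rng = np.random.default_rng(0)
states, actions = record_expert(1_000, rng)
policy_weights = behavioral_cloning(states, actions)
print(policy_weights)
```

The cloned policy recovers the expert's gains without a single risky trial, but it only knows the situations the expert happened to visit, which is why it is used alongside reward-driven training rather than instead of it.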

There are additional reasons to expect RL to work better than control. First, your camera, radar, and lidar systems all likely use deep learning to turn raw sensor signals into statistics that describe the situation the car is in. With an RL approach, you can optimize the model end-to-end, meaning the vision system is optimized to provide whatever statistics help the car drive as effectively as possible. This is not possible with a control system. Your vision system will still be a neural network, but your control system is not. Thus you have to optimize the neural network separately to produce what you think are good statistics, and then optimize the control system to do the best it can with the statistics provided, and the controller can't send feedback to the vision model about which statistics would be most useful. In RL, the driving algorithm can provide this feedback, so the whole system is optimized together.
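The end-to-end argument can be illustrated with a toy linear model, not any company's architecture. The assumptions: four raw sensor channels, a "correct" steering signal that happens to depend on channels 3 and 4, and a modular pipeline whose engineers hand-picked channels 1 and 2 as the perception statistics. In the end-to-end version, the gradient of the driving loss flows back into the perception weights.

```python
import numpy as np

rng = np.random.default_rng(0)
n, n_sensors = 1_000, 4
X = rng.normal(size=(n, n_sensors))          # raw sensor readings (synthetic)
y = X @ np.array([0.0, 0.0, 1.0, -1.0])      # "correct" steering (assumed)


def driving_loss(pred: np.ndarray) -> float:
    return float(np.mean((pred - y) ** 2))


# Modular pipeline: perception is fixed to statistics an engineer chose
# (here, the first two sensors); only the controller is fit on top.
features = X[:, :2]
v_mod, *_ = np.linalg.lstsq(features, y, rcond=None)
modular_loss = driving_loss(features @ v_mod)

# End-to-end: the driving loss is backpropagated through both stages.
W = 0.1 * rng.normal(size=(2, n_sensors))    # perception weights
v = 0.1 * rng.normal(size=2)                 # controller weights
lr = 0.1
for _ in range(2_000):
    f = X @ W.T                              # learned features, shape (n, 2)
    pred = f @ v                             # steering command
    d_pred = 2.0 * (pred - y) / n            # dLoss/dpred
    grad_v = f.T @ d_pred                    # controller gradient
    grad_W = np.outer(d_pred, v).T @ X       # feedback reaching perception
    v -= lr * grad_v
    W -= lr * grad_W
end_to_end_loss = driving_loss((X @ W.T) @ v)

print(modular_loss, end_to_end_loss)
```

The modular pipeline's loss stays high because the controller can only work with the statistics it was handed, while the end-to-end model drives its loss toward zero: the driving loss tells the perception layer which signals matter.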

Another reason to expect RL to be better was that deep learning seemed to work on everything. In 2012, AlexNet outperformed all other models in the ImageNet challenge; from then on, deep learning beat all other approaches and within a few years achieved superhuman performance on image classification. Likewise, transformers, the architecture behind large language models, achieve human-level or better performance on many natural language tasks and can arguably pass the Turing test. AlphaFold essentially solved protein structure prediction for a large class of proteins. And in RL itself, AlphaGo outperformed even the top Go players.

Over time, however, it became clear that reinforcement learning is much harder to use in the real, non-game world. AlphaStar is a reinforcement learning algorithm trained to beat top StarCraft players (StarCraft is a real-time strategy video game). In its blog post, DeepMind reveals that each agent was trained on up to around 200 years of StarCraft gameplay, which was possible only with a large amount of compute. DeepMind also trained many agents, meaning that potentially thousands of years of gameplay were necessary to build this algorithm. The rules of StarCraft are defined by software that you can simulate, so you can perhaps play a year's worth of games in a few days on a GPU. For self-driving cars, however, you have to spend a large amount of time training in the real world, which, unlike software, can't be sped up by 5 or 6 orders of magnitude. Nor can you build simulations of driving environments as effectively, because simulations cannot match real-world physics well enough that models trained in simulation avoid a significant drop in performance when put into the real world. This is called the sim2real gap.
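A toy illustration of the sim2real gap, assuming a one-dimensional lane-keeping model in which the simulator underestimates how strongly the real car responds to steering. Both "response" values are made up. A controller gain tuned to be perfect in simulation makes the real car oscillate out of control.

```python
def run(gain: float, steering_response: float, steps: int = 50, dt: float = 0.1) -> float:
    """Sum of squared lane offsets for a proportional steering controller.

    steering_response is how strongly the vehicle reacts to a steering
    command; the simulator's value and the 'real' value are assumptions.
    """
    offset, cost = 1.0, 0.0
    for _ in range(steps):
        steer = -gain * offset
        offset += steering_response * steer * dt
        cost += offset ** 2
    return cost


SIM_RESPONSE = 1.0    # what the simulator models (hypothetical)
REAL_RESPONSE = 2.5   # what the real car does, unmodeled by the simulator (hypothetical)

# "Train" in simulation: pick the gain that works best there.
gains = [0.5 * i for i in range(1, 21)]   # 0.5, 1.0, ..., 10.0
best_gain = min(gains, key=lambda g: run(g, SIM_RESPONSE))

sim_cost = run(best_gain, SIM_RESPONSE)
real_cost = run(best_gain, REAL_RESPONSE)
print(best_gain, sim_cost, real_cost)
```

Real sim2real gaps are subtler (tire friction, sensor noise, other road users), but the mechanism is the same: a policy tuned to the simulator's physics can exploit details the simulator gets wrong.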

Unlike the other domains mentioned, which deep learning ultimately modeled extremely well, RL in the real world is still frustratingly difficult. No one has introduced a model, or algorithm, that attains superhuman performance in the real world with reasonable amounts of training data. Humans learn to drive a car after about 70 hours of experience. Based on the testing volumes of self-driving auto companies, RL probably requires five or six orders of magnitude more experience, if not more. Waymo has logged 20 million miles on public roads. Assuming an average speed of 60 miles per hour, that comes out to about 333,000 hours of driving, or roughly 4,760 times more hours than a human needs. This does not include the 20 billion miles logged in simulation, which would push the multiple to roughly 4.8 million.
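The experience comparison can be checked directly, using the article's own assumptions: 70 hours for a human, a 60 mph average speed, and Waymo's reported road and simulated mileage.

```python
import math

HUMAN_HOURS = 70                # hours a human needs to learn, per the article
ROAD_MILES = 20_000_000         # Waymo public-road miles
SIM_MILES = 20_000_000_000      # Waymo simulated miles
MPH = 60                        # assumed average speed

road_hours = ROAD_MILES / MPH
road_ratio = road_hours / HUMAN_HOURS
total_ratio = (ROAD_MILES + SIM_MILES) / MPH / HUMAN_HOURS
orders_of_magnitude = math.log10(total_ratio)

print(f"{road_hours:,.0f} road hours, {road_ratio:,.0f}x a human")
print(f"{total_ratio:,.0f}x including simulation (~10^{orders_of_magnitude:.1f})")
```

Public-road miles alone are about 3.7 orders of magnitude more than a human needs; adding simulation brings the gap to roughly 6.7.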

For whatever reason, RL is both exceedingly slow to converge and difficult to get working on the algorithmic side. There are often many hyperparameters, tunable settings that can't be learned by standard training tools, and unless you get them just right, your model will not converge. The bearishness on RL is so palpable that OpenAI disbanded its robotics team to focus on large language models. However, RL is really the only game in town for self-driving cars if we want to achieve fully autonomous driving.
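A deliberately tiny illustration (plain gradient descent, not RL) of how a single hyperparameter, the step size, decides whether training converges at all. RL algorithms have many such knobs, such as learning rates, discount factors, and exploration rates, and they interact.

```python
def gradient_descent(learning_rate: float, steps: int = 50) -> float:
    """Minimize f(x) = x**2 starting from x = 1; return the final x."""
    x = 1.0
    for _ in range(steps):
        x -= learning_rate * 2.0 * x   # the gradient of x**2 is 2x
    return x


for lr in (0.1, 0.9, 1.1):
    print(lr, gradient_descent(lr))
```

A step size of 0.1 or 0.9 converges toward the minimum at 0, while 1.1 makes every step overshoot by more than the last, so x blows up.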

The 6 Levels of Driving Automation

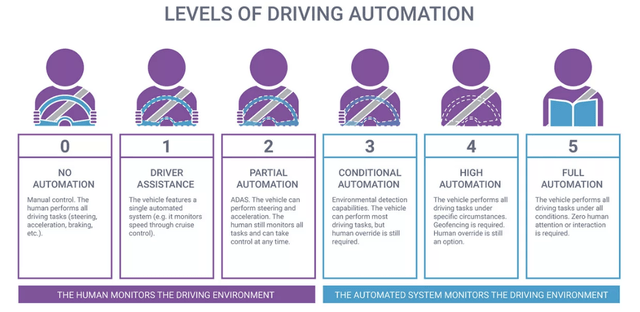

Moving from the algorithms to product quality, the Society of Automotive Engineers (SAE) defines six levels of driving automation, ranging from 0 (no automation) to 5 (full automation).

Six Levels of Automation (Synopsys)

Among the big players, Cruise, Waymo, and Apollo are all at level 4 automation, Mobileye has more recently begun testing level 4 cars, and Tesla is still at level 2 automation. The caveat is that Cruise, Waymo, Apollo, and Mobileye all operate within an operational design domain (ODD). Because these companies rely on high-definition maps of the streets they travel and still have issues operating in high-risk situations (e.g., stop-and-go traffic), their cars can only operate in specific areas where those high-definition maps have been charted. Tesla, on the other hand, doesn't operate with these safeguards or the benefits that come with them, so its level of automation is lower.

Tesla's Autopilot

In 2014, before anyone knew how hard it would be to get RL to work, Tesla made its famous decision not to use lidar, radar, or high-definition maps, and to rely only on computer vision. At the time, it seemed like a good idea: humans can drive with vision alone, and data scientists assumed that deep learning would match human performance on most tasks given enough data. Machine learning has largely lived up to that reputation; the main area where it has not is RL. Ultimately, this put TSLA at a huge computational disadvantage compared to the companies that use lidar and other sensors.

Another consideration was the high cost of lidar, which would have made it infeasible to sell to consumers. Lidar could cost as much as $75,000 at the time. However, recent estimates suggest lidar can now be sold for $500. This was another miscalculation by TSLA. Far from deep learning putting TSLA's Autopilot on par with systems that gathered more information, TSLA needed more data to keep up with the other players.

I suspect this was one reason for the aggressive rollout of TSLA's Autopilot and the assurances that the product was safer than it was. When training a self-driving car to human standards could take billions of hours of driving data, TSLA gambled with lives to get more people to provide training data for the company.

Among insiders, TSLA is known as a terrible ambassador for self-driving tech. The Washington Post found that TSLA's Autopilot had been involved in 736 crashes since 2019, with 17 deaths. Eleven of these deaths happened after May 2022, as Autopilot was more broadly rolled out. As evidence of TSLA's aggressiveness, TSLA accounted for 736 of the 807 automation-related crashes (about 91%), while other companies practiced extremely conservative autonomous driving rollouts.

The problem with self-driving cars in general, and perhaps with TSLA's poor Autopilot record in particular, is that any time an autopilot system leads to a crash, it becomes front-page news, while a fatality caused by a drunk driver hardly makes the local paper. Dara Khosrowshahi, CEO of Uber (UBER), made this point on a podcast. Discussing Uber's divestiture of its self-driving car business, he pointed out that there are 40,000 road fatalities a year. Even if self-driving cars cut that by two orders of magnitude, there would still be 400 deaths a year, or about one per day, and because they come from autonomous driving, they will draw more attention. This is an argument for TSLA being more careful than it would otherwise need to be, because the whole industry suffers when TSLA's Autopilot gets into another accident.

The Rest of the Market

Tesla's autonomous driving is fairly dangerous, given the disconnect between what the company projects and its accident history. However, more responsible companies like Cruise and Waymo have also borne the brunt of regulatory attention, even though their automation seems likely to be saving lives. Waymo reported 2 accidents in a million miles, neither of which caused an injury, and neither of which appeared to be the Waymo car's fault. National statistics average 3 accidents per million miles. It's unrealistic to expect zero accidents per million miles, especially when human drivers can crash into a Waymo car. This suggests that Waymo, within its ODD and with a human taking the wheel in extreme circumstances, could be as safe as a human driver.
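Comparing 2 accidents to a national average of 3 per million miles involves very small counts. A minimal sketch, assuming accidents arrive independently at a constant rate (a Poisson process, which is a simplification), shows how often a driver exactly as accident-prone as the national average would also log 2 or fewer accidents in a million miles.

```python
import math


def poisson_cdf(k: int, rate: float) -> float:
    """P(X <= k) for a Poisson random variable with mean `rate`."""
    return sum(math.exp(-rate) * rate ** i / math.factorial(i) for i in range(k + 1))


# If a driver were exactly as accident-prone as the national average
# (3 per million miles), how often would a million miles yield 2 or fewer?
p_two_or_fewer = poisson_cdf(2, rate=3.0)
print(f"{p_two_or_fewer:.3f}")
```

The result is roughly 42%, so a single million-mile sample cannot by itself show that Waymo is safer, which fits the hedged "could be as safe" framing.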

Even if Cruise and Waymo are safer than humans on an accident basis, they could still behave problematically and fail to follow the norms of the road. After Cruise and Waymo rolled out their paid robotaxi services in San Francisco, there were reports of crashes and videos of autonomous cars not "understanding" the right thing to do. It's hard to tell whether autonomous driving is being held to a higher standard than human drivers or whether these vehicles are still not as good as the average driver. Either way, these companies need real-world experience to improve their driving.

Other players are testing self-driving elsewhere. MBLY is testing its level 4 autonomous driving in Detroit, and the company will roll out robotaxis in Germany and Israel, where it already has test cars on the road. Apollo aims to build the world's largest ODD for its level 4 robotaxis, focused on Wuhan, Chongqing, and Beijing. Apollo is the market leader in China and will have regulatory advantages over non-Chinese companies.

Business Sector Analysis

The self-driving car business is extremely capital-intensive from a research and development perspective. Unlike most software businesses, it will also have lower-than-SaaS gross margins when sold to the mass market, because of the cost of lidar, radar, and high-definition mapping. Given these dynamics, it's not surprising that there have been many failures, such as Argo AI. However, the total addressable market (TAM) for this business is large, and the punishing economics of the R&D phase build a moat for the companies that manage to achieve fully autonomous or even driver-assisted self-driving tech. Global automotive sales are roughly $3 trillion.

If the autonomous driving feature accounts for 10% of the sale price across the entire sector, that is a $300 billion market. Takeaway and ride-hailing are expected to be a $1 trillion market by 2030, and a 20% share of that business adds $200 billion, for $500 billion in combined auto and services TAM. There are around 4-5 market leaders across the globe. Even if only 50% of the total TAM is split among the best-performing incumbents, each could make $50 billion, which is enough to cover total research costs in at most 2-3 years (after accounting for the cost of goods sold and operating costs). Companies would have to scale up to that kind of presence, but once the industry is mature, even one year of profits could pay back all the research and development needed to reach fully autonomous driving.
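The TAM arithmetic in this paragraph can be reproduced in a few lines. This is only a restatement of the article's own assumptions (10% feature share, 20% services share, 50% captured by five leaders), not an independent estimate; integer math in billions keeps the numbers exact.

```python
# All figures in billions of US dollars, taken from the article's assumptions.
AUTO_SALES = 3_000          # global automotive sales
RIDE_HAILING_2030 = 1_000   # takeaway + ride-hailing, projected for 2030
LEADERS = 5                 # upper end of the "4-5 market leaders"

auto_feature_tam = AUTO_SALES * 10 // 100         # 10% of car prices
services_tam = RIDE_HAILING_2030 * 20 // 100      # 20% share of services
combined_tam = auto_feature_tam + services_tam
captured_by_leaders = combined_tam * 50 // 100    # 50% of TAM to incumbents
per_leader = captured_by_leaders // LEADERS

print(auto_feature_tam, services_tam, combined_tam, captured_by_leaders, per_leader)
```

With four leaders instead of five, the same split gives about $62.5 billion each.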

But with limited revenue today, these businesses have to carry a huge research burden, even if that burden is quite reasonable compared with the total profits of a mature operator. The immense research costs stem from the data-hungry nature of reinforcement learning. One can get a boost by training the self-driving algorithm in simulation, but, as mentioned above, policies learned in simulation degrade in performance when deployed in the real world. It is comparatively easy to build a level 2 system with a fully alert driver: when something occurs that the model can't handle, the system simply alerts the driver. With only $18 million in funding raised, comma.ai demonstrated how easily a level 2 system can be implemented.

The challenge for self-driving cars lies in the long tail of edge cases the model must handle to operate without any human intervention. These edge cases are individually rare, but there are millions of different "rare" situations that a human can handle but software cannot without past data to guide it. This is why Waymo already has 20 million miles on the road and still can't commercialize level 5 autonomous driving, even with ODD restrictions and the ability to refer to a high-definition map of the road. Additionally, regulators are going to want self-driving cars that are demonstrably safer than human drivers before allowing mass production. All of this means that to develop self-driving software, companies have to build fleets of cars with lidar and radar, hire drivers to drive them, have engineers build the software, and create high-definition maps of the area of operation.

While the TAM of the industry is large, there are significant moats that prevent challenger start-ups from building competing self-driving software. First, from a regulatory standpoint, allowing self-driving cars to test in your city is an unpopular decision for politicians. How much harder would it be to let start-ups test in your city when there are already 2-3 incumbents with fully self-driving capability? Additionally, Waymo had 700 self-driving cars in 2021. In the beginning, all of these cars needed drivers as they drove the 20 million miles Waymo has accumulated, which is still not enough for level 5 autonomous driving. Add to this the engineering and software costs, and the cost of building this tech before seeing reasonable revenue is daunting. Ultimately, the companies that succeed with level 5 autonomous driving, or even just level 2+ advanced driver assistance systems (ADAS) like TSLA and MBLY, will face limited competition even for their more basic tech.

MBLY is the best positioned. As a separate company, it can sell to both robotaxi operators and OEMs. Waymo could do the same, but the company is concentrating on the robotaxi market. Some other self-driving companies, such as Cruise, are owned by OEMs and would likely use their product to increase the value of their parent's cars. For an OEM subsidiary, it makes less economic sense to sell its tech to robotaxi operators, since the tech is designed to make the parent's cars more attractive. This is what puts MBLY in the best position. It has sold its tech to OEMs, which allows it to accumulate more data, but since it isn't affiliated with a single OEM, it isn't disincentivized from selling to robotaxi operators and other outside bidders like trucking companies.

From a valuation perspective, MBLY is somewhat expensive. If it succeeds in designing fully self-driving cars and captures a reasonable share of the TAM, the valuation looks reasonable. But nothing guarantees it will get there, and a low valuation provides a margin of safety. Buying GM and getting Cruise as part of a sum-of-the-parts thesis looks interesting. Cruise is valued at $20-30 billion, and GM owns 80% of it. Cruise is losing money; however, even with this hit to its income statement, GM is earning almost $10 billion in profit and trading at a $50 billion valuation. On the other hand, the $10 billion in profit is a cyclical peak in earnings, and the company carries about $100 billion in debt.
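To make the sum-of-the-parts idea concrete, here is the arithmetic using only the figures in this paragraph, in billions of dollars. It ignores Cruise's ongoing losses, GM's debt, and any holding-company discount, so treat it as a back-of-the-envelope sketch rather than a valuation.

```python
# Billions of US dollars, from the article's figures.
CRUISE_VALUATION = (20, 30)   # low and high estimates
GM_STAKE_PCT = 80
GM_MARKET_CAP = 50
GM_PROFIT = 10                # cyclical-peak earnings

stake_low, stake_high = (v * GM_STAKE_PCT // 100 for v in CRUISE_VALUATION)
share_of_cap = (stake_low / GM_MARKET_CAP, stake_high / GM_MARKET_CAP)
pe_ratio = GM_MARKET_CAP / GM_PROFIT

print(stake_low, stake_high, share_of_cap, pe_ratio)
```

On these numbers, GM's stake in Cruise alone is worth roughly a third to a half of GM's market cap, with the whole company trading at about 5x peak earnings.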

A safer play would be to invest in BIDU. The company also has a market cap of about $50 billion. It is one of China's tech giants, although it has somewhat fallen out of favor with Chinese and outside investors, as reflected in its 15x P/E. There are clear corporate governance and regulatory concerns with investing in China, which is one reason for the valuation. At the same time, BIDU has no debt and has search and cloud businesses growing at a mid-double-digit rate.

Apollo is the market leader in China, where policy will probably prevent the Western autonomous driving leaders from entering the market. Without analyzing BIDU's other businesses, this might be a safe and reasonably valued way to buy ownership in autonomous vehicle software. For more information on the BIDU investment thesis, here is another Seeking Alpha pitch that focuses more on BIDU and less on the self-driving industry.

Conclusion

If one wants to bet on the self-driving business, BIDU would probably be the best play. Despite TSLA's bluster, it has made serious strategic missteps in building its Autopilot system. To make up ground, it has had to push its tech aggressively, encouraging more people to use it and to test its limits. As a result, its accidents give TSLA and the autonomous driving industry a bad name.

Compared to MBLY, Waymo, and Cruise, Tesla's tech is significantly behind. As MBLY, Waymo, and Cruise show, the self-driving industry is hugely research-and-development intensive. At the same time, the TAM is large, and the companies that win in commercializing level 5 autonomous driving will command a huge market.


Analyst’s Disclosure: I/we have no stock, option or similar derivative position in any of the companies mentioned, but may initiate a beneficial Short position through short-selling of the stock, or purchase of put options or similar derivatives in TSLA over the next 72 hours. I wrote this article myself, and it expresses my own opinions. I am not receiving compensation for it (other than from Seeking Alpha). I have no business relationship with any company whose stock is mentioned in this article.

Seeking Alpha's Disclosure: Past performance is no guarantee of future results. No recommendation or advice is being given as to whether any investment is suitable for a particular investor. Any views or opinions expressed above may not reflect those of Seeking Alpha as a whole. Seeking Alpha is not a licensed securities dealer, broker or US investment adviser or investment bank. Our analysts are third party authors that include both professional investors and individual investors who may not be licensed or certified by any institute or regulatory body.