AI tools are software programmes or platforms that employ artificial intelligence (AI) methods to carry out particular activities or functions. These tools often use machine learning algorithms, natural language processing, computer vision, or other AI techniques to analyse data, extract insights, automate procedures, and generate predictions or recommendations. Artificial intelligence used to be viewed with suspicion or even trepidation, and scary cinematic representations like Terminator haven’t helped change that perception. Nonetheless, the technology is now more widely available and practical than ever before, and it is used in both personal and professional settings. Below are ten of the best AI tools, along with their key features.

You may even wonder what artificial intelligence is. Artificial intelligence is the ability of computer systems to carry out activities that typically need human intellect. Understanding these programmes and incorporating them into your work can simplify many tasks and help you better understand the world around you.

We’re constantly seeking ways to work more efficiently in the modern world. That’s why we’ve compiled this list of the top 10 best AI tools that may help developers, individuals, and companies.

Top 10 best AI tools

1. Scikit-learn

Scikit-learn, sometimes called sklearn, is a well-liked open-source machine learning package for the Python programming language. It offers a comprehensive range of machine learning tools, including supervised and unsupervised learning methods and tools for preparing data, choosing models, and evaluating them. Because scikit-learn is built on top of other well-known Python libraries, like NumPy, SciPy, and matplotlib, it is simple to connect it with other data analysis tools in the Python ecosystem. Scikit-learn’s salient characteristics include the following:

- A range of supervised learning techniques, including support vector machines, decision trees, random forests, and logistic regression.

- A range of unsupervised learning techniques, including dimensionality reduction techniques like PCA and t-SNE and clustering techniques like K-Means and DBSCAN.

- Tools for model selection, such as cross-validation and grid search, that can assist users in selecting the best model and hyperparameters for their data.

- Preprocessing tools for scaling, normalisation, and feature selection that help users prepare their data for machine learning.

- A simple, consistent interface for common machine learning tasks like classification, regression, and clustering.

- A broad and active community of users and contributors, so scikit-learn is updated and enhanced often.
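
To make the preprocessing and model selection features above more concrete, here is a minimal sketch that chains scaling and a support vector machine in a pipeline and tunes it with grid search and 5-fold cross-validation. The dataset (the built-in iris data), the hyperparameter values, and the step names `scale` and `clf` are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Load a small built-in dataset and hold out a test split.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Chain preprocessing and the classifier so scaling is refit inside each CV fold.
pipeline = Pipeline([
    ("scale", StandardScaler()),
    ("clf", SVC()),
])

# Candidate hyperparameters (illustrative values only).
param_grid = {
    "clf__C": [0.1, 1.0, 10.0],
    "clf__kernel": ["linear", "rbf"],
}

# Grid search evaluates every combination with 5-fold cross-validation.
search = GridSearchCV(pipeline, param_grid, cv=5)
search.fit(X_train, y_train)

best_params = search.best_params_
test_accuracy = search.score(X_test, y_test)
print(best_params, round(test_accuracy, 3))
```

Putting the scaler inside the pipeline, rather than scaling the data once up front, keeps information from the validation folds out of the preprocessing step.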

2. TensorFlow

The Google Brain team created TensorFlow, an open-source software library for machine learning and artificial intelligence. TensorFlow is written in Python, C++, and CUDA. It was first released in 2015 and has become one of the most extensively used and well-liked deep learning libraries.

One of TensorFlow’s primary characteristics is that it can perform deep neural network-based computations on enormous data sets. These networks are used for many tasks, including speech recognition, natural language processing, and image recognition.

TensorFlow’s salient characteristics include the following:

- A high-level API, Keras, that offers a user-friendly interface for creating and training deep neural networks.

- A scalable and adaptable infrastructure for model deployment and distributed training across many devices.

- A large selection of pre-made models and tools for applications like image recognition and natural language processing.

- Python, C++, and Java are among the programming languages supported.

- Support for GPUs, which speeds up model training and inference.
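
As a rough illustration of the high-level Keras API mentioned above, the sketch below defines, trains, and queries a very small network through `tf.keras`. The synthetic data, layer sizes, and training settings are assumptions chosen only to keep the example short; it is not a tuned model.

```python
import numpy as np
import tensorflow as tf

# Synthetic data: 200 samples, 4 features, binary label (purely illustrative).
rng = np.random.default_rng(0)
features = rng.normal(size=(200, 4)).astype("float32")
labels = (features.sum(axis=1) > 0).astype("float32")

# A small feed-forward network defined with the Keras Sequential API.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(4,)),
    tf.keras.layers.Dense(8, activation="relu"),
    tf.keras.layers.Dense(1, activation="sigmoid"),
])

# Compile with an optimiser and loss, then train for a few epochs.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
history = model.fit(features, labels, epochs=5, batch_size=32, verbose=0)

# Predict probabilities for the first three samples.
predictions = model.predict(features[:3], verbose=0)
print(predictions.shape)
```

If a supported GPU is visible to TensorFlow, the same code should use it without changes; otherwise it runs on the CPU.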

3. PyTorch

PyTorch is an open-source machine learning framework whose main function is creating and training deep neural networks. It builds on the Torch library, a scientific computing framework for the Lua programming language, and was created by Facebook’s artificial intelligence research team.

With a straightforward and adaptable API, PyTorch is user-friendly and intuitive, making it simple to develop and experiment with neural networks. Moreover, it provides a dynamic computational graph, allowing you to instantly alter the network’s topology and view the consequences without compiling your code.

PyTorch has several important features, including:

- Dynamic computational graph: The dynamic computational graph in PyTorch enables you to define your network as you go, facilitating speedy experimentation and iteration.

- Simple debugging: Because PyTorch models run as ordinary Python code, you can debug them interactively with standard Python debuggers such as pdb, and you can explore the architecture of your network with programmes like TensorBoard.

- GPU acceleration: PyTorch can use GPUs to significantly speed up the training process, enabling you to train larger and more complicated models in less time.

- Distributed training: PyTorch supports distributed training, allowing you to train your model on several GPUs or even several computers simultaneously.

- Automatic differentiation: PyTorch supports automatic differentiation natively, making it simple to compute gradients and train models using backpropagation.
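
The dynamic graph and automatic differentiation features above can be sketched in a few lines. In this minimal example, the helper `forward` and its `steps` parameter are hypothetical names introduced for illustration; an ordinary Python loop decides how many operations are recorded, and `backward()` computes the gradient of whatever graph was built on that call.

```python
import torch

# Use a GPU when one is available; otherwise fall back to the CPU.
device = "cuda" if torch.cuda.is_available() else "cpu"


def forward(x, steps):
    # Ordinary Python control flow decides the graph's shape on each call.
    out = x
    for _ in range(steps):
        out = out * x
    return out.sum()


x = torch.tensor([1.0, 2.0, 3.0], device=device, requires_grad=True)

# With steps=2 the function computes sum(x**3), so the gradient is 3 * x**2.
loss = forward(x, steps=2)
loss.backward()

grad = x.grad.cpu()
print(grad)
```

Calling `forward` again with a different `steps` value would record a different graph, with no separate compilation step.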

4. CNTK

Microsoft created CNTK (Microsoft Cognitive Toolkit), an open-source deep learning framework. Thanks to its efficient and scalable architecture, large neural networks can be trained on several GPUs or multiple computers.

CNTK’s salient characteristics include the following:

- Excellent performance: You can train models rapidly and effectively using CNTK since it is well-tuned for CPU and GPU architectures.

- Simple API: CNTK’s API makes creating and training deep neural networks straightforward.

- Support for numerous languages: CNTK is compatible with Python, C++, and C#, among other programming languages.

- Distributed training: CNTK supports training that is distributed over several GPUs or computers, making it simple to scale up your training procedure.

- Deep learning primitives: CNTK offers a collection of low-level primitives for designing unique neural network topologies and a set of high-level abstractions for creating and training neural networks.

- Pretrained models: CNTK has a library of pre-trained models that may be used for various applications, such as voice and picture recognition.

5. Caffe

The Berkeley Vision and Learning Center (BVLC) created the open-source deep learning framework Caffe. It is designed to be highly efficient and adaptable, with an emphasis on convolutional neural networks (CNNs) and other deep learning architectures frequently used in computer vision applications.

Caffe’s salient characteristics include the following:

- Efficiency: You can train and deploy models fast and effectively using Caffe since it is well-tuned for CPU and GPU architectures.

- Flexibility: Convolutional neural networks (CNNs), recurrent neural networks (RNNs), and other network topologies are supported by Caffe.

- Ease of use: Caffe features an easy-to-use interface that makes it straightforward to train and evaluate deep neural networks.

- Pretrained models: Caffe has a library of pre-trained models that you may utilise for various tasks, such as segmenting and recognising images.

- Large community: Caffe has a sizable and active user and contributor community, making it simple to get help and resources.

- Visualization tools: Caffe offers visualisation tools to assist you in understanding your network’s performance and troubleshooting any possible problems.

6. Apache MXNet

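Apache MXNet is an open-source deep learning framework for creating and training neural networks. It grew out of an open-source research collaboration, was adopted by Amazon Web Services as its preferred deep learning framework, and was later developed under the Apache Software Foundation.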

MXNet may be used on a range of platforms, including CPUs, GPUs, and distributed systems, and it supports several programming languages, including Python, R, Julia, Java, C++, and Scala.

One of MXNet’s distinguishing characteristics is its capacity to scale well over several workstations and devices, enabling shorter training periods and handling bigger datasets. It accomplishes this by using a dynamic computation graph to optimise the distribution of computing resources.

MXNet also provides various pre-built neural network layers and models, along with tools for data preparation and visualisation. In addition, MXNet supports transfer learning, enabling users to adapt previously trained models to their own needs.

Overall, Apache MXNet is a robust and adaptable deep learning framework that academics and developers can utilise for a range of applications.

7. Keras

Another name on the list of top 10 best AI tools is Keras, a Python-based open-source framework for deep learning. It was created by François Chollet and is currently maintained by the Google TensorFlow team.

Keras offers a high-level API that makes it simple for users to create, train, and assess neural networks. It has supported several backends, including TensorFlow, Microsoft Cognitive Toolkit, and Theano, giving users the option to select the one that best suits their needs.

Simplicity and ease of use are two of Keras’s key characteristics. Thanks to its user-friendly API, users can design and test various neural network architectures with relatively little code. Keras also offers a large selection of pre-made layers and models that are easily adapted to specific needs.

Keras supports both conventional neural networks and more complex architectures, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Keras also makes it easy to apply transfer learning, so users can adapt previously trained models to their own applications.

Keras also provides powerful tools for data preprocessing and visualization, making it a versatile and comprehensive deep-learning framework. With its user-friendly API and extensive documentation, Keras is an ideal framework for researchers and developers looking to quickly prototype and deploy neural network models.

8. OpenNN

For creating and training neural networks, OpenNN (Open Neural Networks Library) is an open-source C++ software library. It was created by the University of the Basque Country’s Artificial Intelligence and Pattern Recognition Group in Spain.

For creating and training several kinds of neural networks, such as feedforward neural networks, recurrent neural networks, and convolutional neural networks, OpenNN offers a variety of techniques and tools. Deep learning, reinforcement learning, and unsupervised learning are also supported.

Simplicity and adaptability are two of OpenNN’s key characteristics, and they help make it one of the top 10 best AI tools. Users can construct, train, and assess neural networks through its API with relatively little code. OpenNN also offers a selection of pre-built models and datasets that are simple to adapt to specific needs.

With OpenNN’s support for both CPU and GPU processing, training durations can be shortened, and bigger datasets can be handled. Moreover, it offers tools for optimising hyperparameters, enabling users to fine-tune their models for the best results.

A complete deep learning framework, OpenNN also provides tools for data preparation and visualisation. OpenNN is a great platform for academics and developers who want to quickly prototype and deploy neural network models because of its user-friendly API and comprehensive documentation.

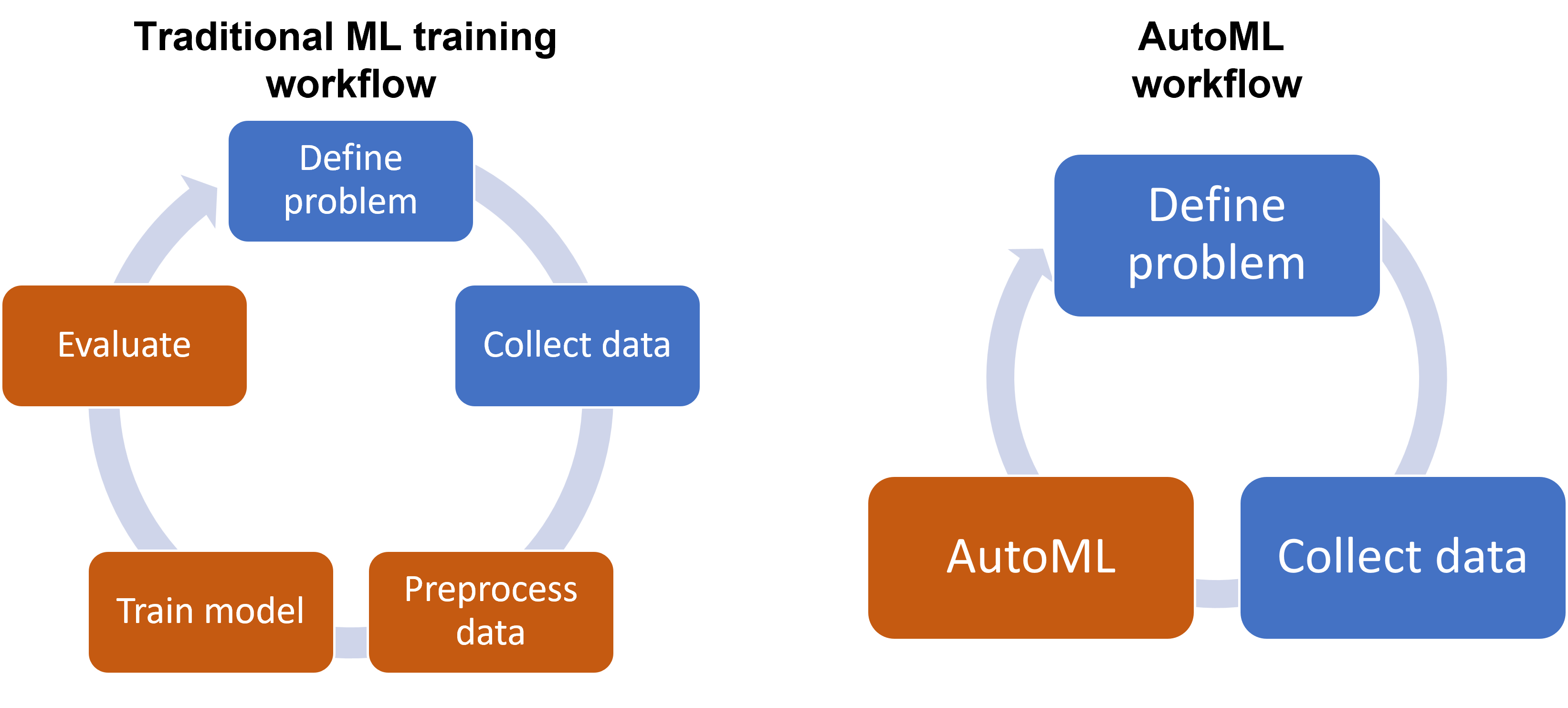

9. AutoML

The term “Automated Machine Learning” (AutoML) describes the use of machine learning techniques to automate the development and deployment of machine learning models. By making machine learning easier to understand for developers and researchers without a background in the field, AutoML intends to increase its accessibility.

To automate machine learning, a variety of methods, including hyperparameter optimization, model selection, and neural architecture search, are used. Users can quickly construct and deploy machine learning models by eliminating the need to manually adjust and optimise each component of the model.

The ability of AutoML to drastically minimise the time and effort needed to develop and deploy machine learning models is one of its key advantages. By automating the hyperparameter tuning and model selection processes, AutoML may also enhance machine learning models’ performance.

Time series analysis, natural language processing, and image classification are just a few of the many tasks for which AutoML may be used. It may also be used with a variety of machine learning models, such as support vector machines, decision trees, and deep learning models.
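
To give a feel for what model selection and hyperparameter optimisation automate, the following is a deliberately simplified stand-in written with scikit-learn rather than a dedicated AutoML library. The candidate models, the hyperparameter grids, the `search_space` dictionary, and the built-in wine dataset are all assumptions made for illustration; real AutoML systems typically search far larger spaces with smarter strategies.

```python
from sklearn.datasets import load_wine
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

X, y = load_wine(return_X_y=True)

# Hypothetical search space: each entry pairs a model with a small hyperparameter grid.
search_space = {
    "logistic_regression": (
        make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        {"logisticregression__C": [0.1, 1.0, 10.0]},
    ),
    "decision_tree": (
        DecisionTreeClassifier(random_state=0),
        {"max_depth": [2, 4, 8]},
    ),
    "svm": (
        make_pipeline(StandardScaler(), SVC()),
        {"svc__C": [0.1, 1.0, 10.0]},
    ),
}

# Tune each candidate with 5-fold cross-validation and record its best score.
results = {}
for name, (estimator, grid) in search_space.items():
    search = GridSearchCV(estimator, grid, cv=5)
    search.fit(X, y)
    results[name] = (search.best_score_, search.best_params_)

# Select the candidate with the highest cross-validated accuracy.
best_name = max(results, key=lambda name: results[name][0])
best_score, best_params = results[best_name]
print(best_name, round(best_score, 3), best_params)
```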

10. H2O

H2O is a platform for creating and deploying machine learning models. It was created by the H2O.ai data science team and is written in Java, with APIs for R, Python, and SQL.

For creating and training several kinds of machine learning models, such as deep learning models, gradient boosting machines, and generalised linear models, H2O offers a variety of techniques and tools. Moreover, it provides tools for model interpretation, visualisation, and data preparation.

The performance and scalability of H2O are two of its key characteristics. Large models may be trained on computer clusters thanks to its support for distributed computing. Moreover, H2O offers GPU acceleration, which may dramatically shorten deep learning model training periods.

H2O Flow, H2O’s user-friendly web-based interface, makes creating, training, and applying machine learning models simple. H2O Flow provides various visualisations and tools for data exploration and model interpretation.

H2O also has automated machine learning (AutoML) features that can automatically choose the best algorithm and hyperparameters for a given dataset, making it simpler for users without a background in machine learning to develop and deploy models. Together, these features make H2O one of the top 10 best AI tools on the market.