Besides aiding Facebook in its moderation decisions, the Oversight Board made key recommendations, such as undertaking a health misinformation assessment in relation to the COVID-19 pandemic.

Facebook on Thursday published its first Oversight Board quarterly update, the first document from the company describing its relations with the quasi-judicial authority that hears appeals from Facebook users who are unsatisfied with moderation decisions. In a blog post accompanying the report, Facebook admitted that it may have bitten off more than it can chew (or at least, more than it can chew quickly):

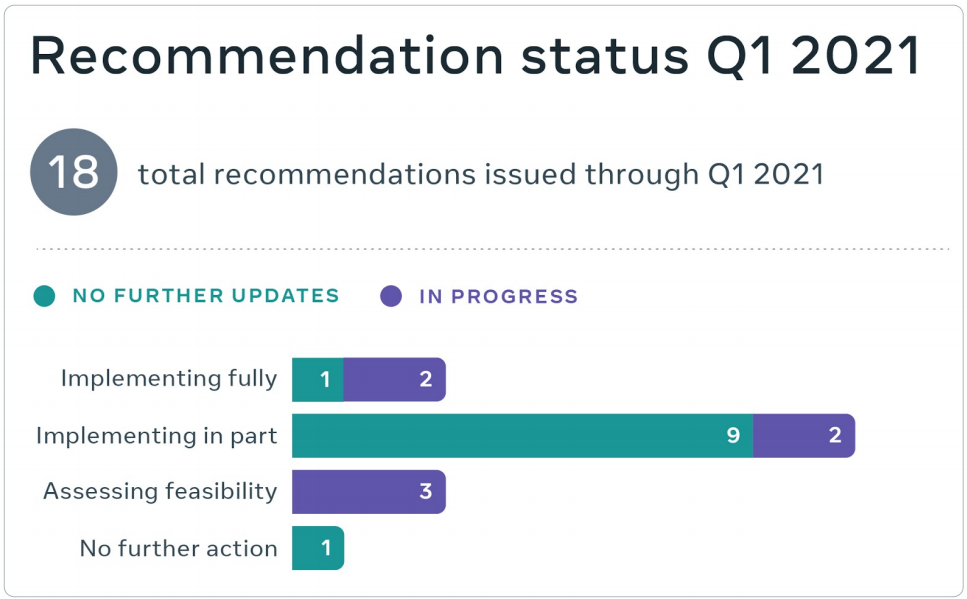

In the first quarter of 2021, the board issued 18 recommendations in six cases. We are implementing fully or in part 14 recommendations, still assessing the feasibility of implementing three, and taking no action on one. The size and scope of the board’s recommendations go beyond the policy guidance that we first anticipated when we set up the board, and several require multi-month or multi-year investments. The board’s recommendations touch on how we enforce our policies, how we inform users of actions we’ve taken and what they can do about it, and additional transparency reporting. — Jennifer Broxmeyer, Director, Content Governance, Facebook

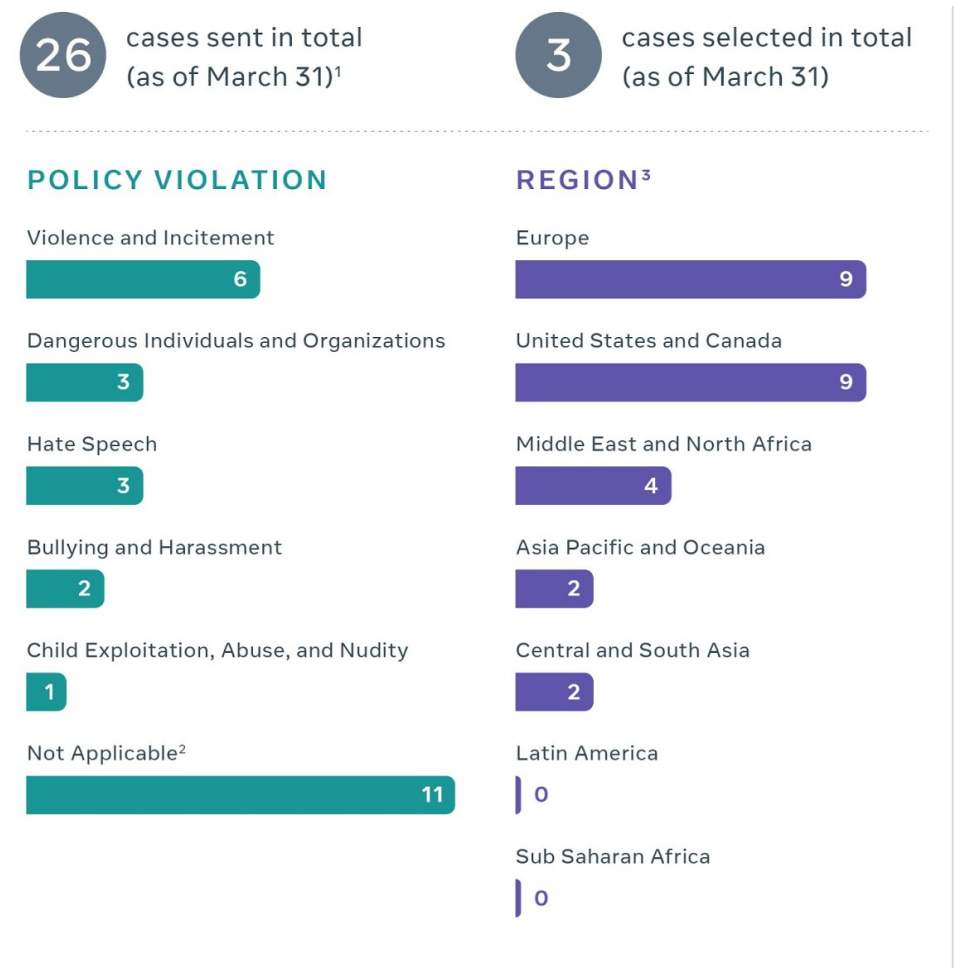

26 cases referred to the Board, 3 accepted

In addition to hearing appeals from the public, the Oversight Board accepts referrals from Facebook: difficult decisions that the company has already acted on but for which it wants guidance on handling similar situations in the future. Under this framework, the company referred 26 cases, of which only three were accepted: “a case about supposed COVID-19 cures; a case about a veiled threat based on religious beliefs; and a case about the decision to indefinitely suspend former US President Donald Trump’s account,” the company said.

A breakdown of the 26 cases Facebook referred to the Oversight Board, by policy and region. Source: Facebook

Status of recommendations

Along with decisions, the Oversight Board issues “non-binding recommendations” that Facebook can implement at its discretion. Here is a list of the recommendations, sorted by status:

- Implementing/implemented fully: These are the recommendations Facebook is implementing fully or has already implemented fully.

- Improve automated detection: “Improve the automated detection of images with text-overlay to ensure that posts raising awareness of breast cancer symptoms [on Facebook and Instagram] are not wrongly flagged for review.”

- Adult nudity guidelines clarity: “Revise the Instagram Community Guidelines around adult nudity. Clarify that the Instagram Community Guidelines are interpreted in line with the Facebook Community Standards, and where there are inconsistencies, the latter take precedence.” Facebook said that for the most part, its content policies for Instagram and Facebook are identical and that it would make this clear.

- Appealability: “Ensure users can appeal decisions taken by automated systems to human review when their content is found to have violated Facebook’s Community Standard on Adult Nudity and Sexual Activity.” Facebook offers appeal options for such cases.

- Implemented/implementing in part: These are recommendations that Facebook is implementing partially.

- Facebook & Instagram consistency: “When communicating to users about how they violated policies, be clear about the relationship between the Instagram Community Guidelines and Facebook Community Standards.” Facebook said that “We will continue to work toward consistency between Facebook and Instagram and provide updates within the next few months.”

- Specificity of reasoning: “Go beyond the Community Standard that Facebook is enforcing, and add more specifics about what part of the policy they violated.” Facebook said that it would do what it can, based on technological feasibility.

- User notifications: “Ensure that users are always notified of the Community Standards Facebook is enforcing.” This recommendation followed a mistake in Facebook’s systems that led to users not getting notified of a community standard action. This has been fixed, Facebook said.

- Dangerous individuals and organisations: “Explain and provide examples of the application of key terms used in the Dangerous Individuals and Organizations policy. These should align with the definitions used in Facebook’s Internal Implementation Standards.” Facebook said that it would make this policy clearer. “We commit to adding language to the Dangerous Individuals and Organizations Community Standard clearly explaining our intent requirements for this policy. We also commit to increasing transparency around definitions of ‘praise,’ ‘support,’ and ‘representation,’” the company said.

- Health misinformation clarity: “Clarify the Community Standards with respect to health misinformation, particularly with regard to COVID-19. Facebook should set out a clear and accessible Community Standard on health misinformation, consolidating and clarifying existing rules in one place.” Facebook said that it had implemented this partly by creating a Help Center article.

- Transparency on health misinformation: “Facebook should 1) publish its range of enforcement options within the Community Standards, ranking these options from most to least intrusive based on how they infringe freedom of expression, 2) explain what factors, including evidence-based criteria, the platform will use in selecting the least intrusive option when enforcing its Community Standards to protect public health, and 3) make clear within the Community Standards what enforcement option applies to each rule.” Facebook said that it would launch a Transparency Center in coming weeks to address these concerns.

- Health misinformation assessment: “To ensure enforcement measures on health misinformation represent the least intrusive means of protecting public health, Facebook should conduct an assessment of its existing range of tools to deal with health misinformation and consider the potential for development of further tools that are less intrusive than content removals.” Facebook said it would keep building tools to get authoritative information on health to users.

- Transparency on COVID actions: “Publish a transparency report on how the Community Standards have been enforced during the COVID-19 global health crisis.” Facebook said it would evaluate ways to disclose this information, but did not commit to a report per se.

- On India incitement: “Provide users with additional information regarding the scope and enforcement of this Community Standard [on veiled threats of violence]. Enforcement criteria should be public and align with Facebook’s internal Implementation Standards. Specifically, Facebook’s criteria should address intent, the identity of the user and audience, and context.” Facebook said it would add language to this policy to make it clearer.

- Assessing feasibility: These are cases where Facebook is studying how feasible it is to implement a recommendation. No action has been taken here yet.

- Automation transparency: “Inform users when automation is used to take enforcement action against their content, including accessible descriptions of what this means.”

- Automated decision transparency: “Expand transparency reporting to disclose data on the number of automated removal decisions, and the proportion of those decisions subsequently reversed following human review.”

- List dangerous individuals and organisations: “Provide a public list of the organizations and individuals designated “dangerous” under the Dangerous Individuals and Organizations Community Standard.”
