Apple has launched a series of technologies that make it easier and faster for developers to create new apps. SwiftUI is a key development framework that makes building user interfaces (UIs) easier than ever before. ARKit 3, RealityKit and Reality Composer are advanced tools designed to make it easier for developers to create compelling AR experiences for consumer and business apps. New tools and APIs simplify the process of bringing iPad apps to Mac, and updates to Core ML and Create ML allow for more powerful and streamlined on-device machine learning apps.

SwiftUI

SwiftUI is a powerful and intuitive new UI framework for building sophisticated app UIs. Using simple, easy-to-understand declarative code, developers can create stunning, full-featured user interfaces complete with smooth animations.
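
As a rough illustration of the declarative style, here is a minimal sketch of a small view with an animated state change. The `GreetingView` name, its text and the `isExpanded` property are made up for this example and do not come from Apple's announcement.

```swift
import SwiftUI

// Hypothetical example view; the name and contents are illustrative only.
struct GreetingView: View {
    // View-local state; SwiftUI re-evaluates `body` when it changes.
    @State private var isExpanded = false

    var body: some View {
        VStack(spacing: 16) {
            Text("Hello, SwiftUI")
                .font(.title)

            // Declared conditionally: present only while `isExpanded` is true.
            if isExpanded {
                Text("This line appears when the button is tapped.")
            }

            Button(action: {
                // Wrapping the state change in `withAnimation` animates the update.
                withAnimation {
                    self.isExpanded.toggle()
                }
            }) {
                Text(isExpanded ? "Show less" : "Show more")
            }
        }
        .padding()
    }
}
```

The view describes what the interface should look like for a given state, and the framework works out how to update the screen when that state changes.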

SwiftUI saves developers time by providing a large amount of automatic functionality, including interface layout, Dark Mode, Accessibility, right-to-left language support and internationalisation. And because SwiftUI is the same API built into iOS, iPadOS, macOS, watchOS and tvOS, developers can easily build native apps across all Apple platforms.

Xcode 11 Brings SwiftUI to Life

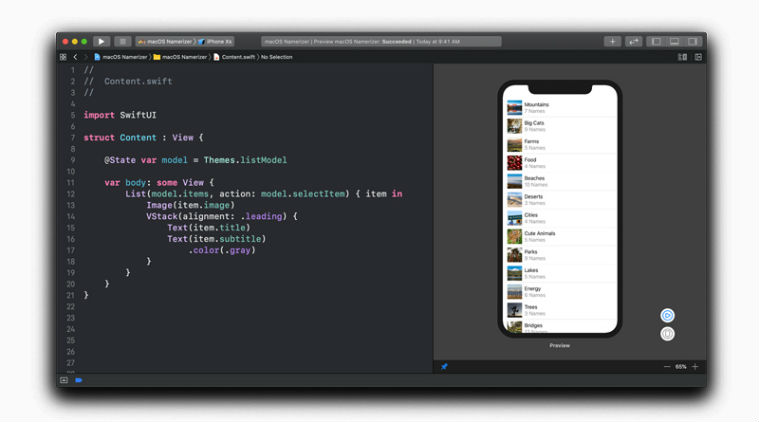

A new graphical UI design tool built into Xcode 11 makes it easy for UI designers to quickly assemble a UI with SwiftUI without having to write any code. Swift code is generated automatically, and when this code is modified, the changes to the UI instantly appear in the visual design tool.

Developers can see automatic, real-time previews of how the UI will look and behave as they assemble, test and refine their code. The ability to fluidly move between graphical design and writing code makes UI development more fun and efficient and makes it possible for software developers and UI designers to collaborate more closely.
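
In code, a preview is typically declared by a type conforming to `PreviewProvider`. The sketch below assumes a hypothetical `BadgeView` defined only to have something to preview, and shows it in both the default and dark colour schemes.

```swift
import SwiftUI

// Hypothetical view used only to have something to preview.
struct BadgeView: View {
    var body: some View {
        Text("New")
            .padding(8)
    }
}

// Xcode looks for types conforming to `PreviewProvider` to drive the preview canvas.
struct BadgeView_Previews: PreviewProvider {
    static var previews: some View {
        Group {
            BadgeView()
            // The same view rendered with the dark colour scheme.
            BadgeView()
                .environment(\.colorScheme, .dark)
        }
    }
}
```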

The previews can run directly on connected Apple devices, including iPhone, iPad, iPod touch, Apple Watch and Apple TV, allowing developers to see how an app responds to Multi-Touch or works with the camera and onboard sensors live, as the interface is being built.

Augmented Reality

ARKit 3 puts people at the centre of augmented reality (AR). With Motion Capture, developers can integrate people’s movement into their app, and with People Occlusion, AR content will show up naturally in front of or behind people to enable more immersive AR experiences and fun green screen-like applications. ARKit 3 also enables the front camera to track up to three faces and supports collaborative sessions, which make it even faster to jump into a shared AR experience.
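
As a hedged sketch of how People Occlusion and collaborative sessions might be switched on, the helper below builds a world-tracking configuration. The function name `makeWorldConfiguration` is hypothetical; the sketch assumes an app that already owns an `ARSession` and handles its own networking between devices.

```swift
import ARKit

// Hypothetical helper; builds a world-tracking configuration with
// People Occlusion (where supported) and collaboration enabled.
func makeWorldConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()

    // People Occlusion is not available on every device, so check first.
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        configuration.frameSemantics.insert(.personSegmentationWithDepth)
    }

    // Ask ARKit to produce collaboration data for a shared session.
    // Sending that data to other devices is left to the app.
    configuration.isCollaborationEnabled = true
    return configuration
}
```

An app would pass the result to its session, for example `session.run(makeWorldConfiguration())`. Motion Capture uses a separate configuration type, `ARBodyTrackingConfiguration`, which is not shown here.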

RealityKit was built from the ground up for AR. It features photorealistic rendering, as well as environment mapping and support for camera effects like noise and motion blur, making virtual content nearly indistinguishable from reality. RealityKit also features incredible animation, physics and spatial audio, and developers can harness the capabilities of RealityKit with the new RealityKit Swift API. Reality Composer, a powerful new app for iOS, iPadOS and Mac, lets developers easily prototype and produce AR experiences with no prior 3D experience.

Easier to Bring iPad Apps to Mac

New tools and APIs make it easier to bring iPad apps to Mac. With Xcode, developers can open an existing iPad project and simply check a single box to automatically add fundamental Mac and windowing features and adapt platform-unique elements like touch controls to keyboard and mouse, providing a huge head start on building a native Mac version of their app.

Mac and iPad apps share the same project and source code, so any changes made to the code translate to both the iPadOS and macOS versions of the app, saving developers time and resources by allowing one team to work on both versions of their app.
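
Where the two versions do need to differ, shared source can branch at compile time. The sketch below uses Swift's `targetEnvironment(macCatalyst)` condition; the function name and the strings are hypothetical and only illustrate one file serving both platforms.

```swift
// Hypothetical helper showing one source file serving both platforms.
func primaryInputHint() -> String {
    #if targetEnvironment(macCatalyst)
    // Compiled only when the iPad app is built for Mac.
    return "Click or use the keyboard"
    #else
    // Compiled for every other target, including iPad.
    return "Tap the screen"
    #endif
}
```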

Core ML and Create ML

Core ML 3 supports the acceleration of more types of advanced, real-time machine learning models. With over 100 model layers now supported in Core ML, apps can use models to deliver experiences that deeply understand vision, natural language and speech. And for the first time, developers can update machine learning models on-device using model personalisation. This technique gives developers the opportunity to provide personalised features without compromising on user privacy.
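
A minimal sketch of on-device personalisation with Core ML's `MLUpdateTask` follows. The `personalise` function and its parameter names are hypothetical; the sketch assumes the app already has a compiled, updatable model at `modelURL` and training examples wrapped in an `MLBatchProvider` that matches the model's training inputs.

```swift
import CoreML
import Foundation

// Hypothetical helper: `modelURL` must point to a compiled, updatable model,
// and `trainingData` must match that model's training inputs.
func personalise(modelAt modelURL: URL,
                 with trainingData: MLBatchProvider,
                 savingTo updatedURL: URL) throws {
    let task = try MLUpdateTask(
        forModelAt: modelURL,
        trainingData: trainingData,
        configuration: nil,
        completionHandler: { context in
            // Persist the updated model locally; the training data
            // never has to leave the device.
            do {
                try context.model.write(to: updatedURL)
            } catch {
                print("Could not save updated model: \(error)")
            }
        }
    )
    // The update runs asynchronously once the task is resumed.
    task.resume()
}
```

Because both the training examples and the updated model stay on the device, this pattern fits the privacy point made above.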

With Create ML, a dedicated app for machine learning development, developers can build machine learning models without writing code. Multiple-model training with different datasets can be used with new types of models, such as object detection, activity classification and sound classification.