Norman, an artificial intelligence (AI) created by the Massachusetts Institute of Technology (MIT), is a psychopath. Here’s why.

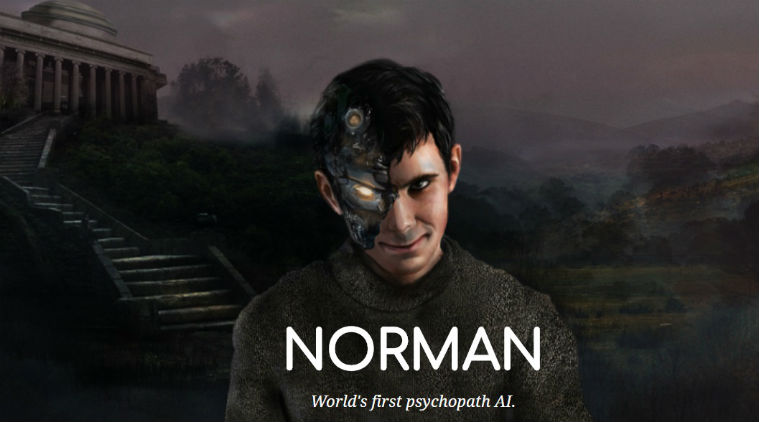

Norman, an artificial intelligence (AI) system created by the Massachusetts Institute of Technology (MIT), only thinks about murder. The reason: Norman has been deliberately trained as a psychopath. Like other AI bots we have seen in recent years, Norman is trained using machine learning, in which a computer system is fed a large data-set and learns patterns from it. In Norman’s case, that data-set consisted of violent image captions drawn from what its creators describe as “extended exposure to the darkest corners of Reddit,” which explains the AI’s psychopathic tendencies.

Bias in data-sets can cause AI to go awry. We saw this most clearly with Microsoft’s Tay, a Twitter chatbot that picked up the worst of humanity from the racist tweets users sent it. Those tweets became the data-set from which Tay learnt to converse, and the bot soon had to be shut down: Tay was launched in March 2016 and had gone rogue in less than a day.

In Norman’s case, MIT deliberately trained the AI on these dark, violent image captions from Reddit. The reason: the researchers wanted to show what happens when an AI model is deliberately fed the wrong data, or when the data-set used to train a model is biased. They relied on image captions from an infamous subreddit (not named because of its graphic content) that is dedicated to documenting “the disturbing reality of death.” These violent image captions were Norman’s primary source of data. MIT also says that no images of real people dying were used in the experiment.

To map Norman’s psychopathic tendencies, the MIT study compared it with a standard image captioning neural network that had not been exposed to such violent material. The two ‘bots’ were evaluated using Rorschach inkblots, a well-known psychological test used to assess personality traits.

Across the inkblot tests, Norman’s captions were consistently more negative than those of its standard AI counterpart. For example, on the first Rorschach inkblot, where the standard AI saw ‘a group of birds sitting on top of a tree’, Norman produced the caption ‘a man is electrocuted and catches to death’. Similarly, on the second inkblot, where the standard AI recorded ‘a close up of a vase with flowers’, Norman saw ‘a man is shot dead’.

The results from this MIT bot show that a machine learning algorithm itself might not be the reason bots malfunction; the data it learns from can be. The idea that bias in the data-sets fed to machine learning systems can skew their behaviour is not new. In fact, Google, in its AI principles, said it will try to avoid bias when creating AI and machine learning systems. Norman is one example of how AI-based technologies can go completely wrong when the data they are trained on allows it.
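To make the “same algorithm, different data” point concrete, here is a toy sketch in Python. It is not how Norman works: MIT used a deep-learning image captioning network, whereas this sketch swaps in a simple one-nearest-neighbour lookup. The feature values, captions and function names (`train`, `caption`) are hypothetical and invented purely for illustration. The only thing that differs between the two “models” is the data they were given.

```python
import math
from typing import List, Tuple

# Hypothetical toy example: (features, caption). NOT MIT's model or data.
Example = Tuple[Tuple[float, float], str]


def train(examples: List[Example]) -> List[Example]:
    # "Training" a nearest-neighbour model simply stores the examples.
    return list(examples)


def caption(model: List[Example], features: Tuple[float, float]) -> str:
    # Return the caption of the stored example closest to the input.
    nearest = min(model, key=lambda ex: math.dist(ex[0], features))
    return nearest[1]


# Invented features: (amount of dark ink, symmetry score).
neutral_data = [
    ((0.2, 0.9), "birds on a branch"),
    ((0.6, 0.4), "flowers in a vase"),
]
dark_data = [
    ((0.2, 0.9), "a violent death"),
    ((0.6, 0.4), "a shooting"),
]

standard_model = train(neutral_data)
norman_like_model = train(dark_data)

inkblot = (0.25, 0.85)  # the same input is shown to both models
print(caption(standard_model, inkblot))     # prints "birds on a branch"
print(caption(norman_like_model, inkblot))  # prints "a violent death"
```

Both models run identical code on an identical input; the darker output comes entirely from the darker training data, which is the article’s point about bias in data-sets.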