Apple’s focus on the image isn’t just about marketing; it’s about unleashing whole new ways of interacting with devices as the company quietly moves to twin machine intelligence with computer vision.

What has Apple done?

WWDC saw numerous OS improvements with this in mind. These enhancements extend across most of Apple’s platforms. Think about:

- Core ML: Apple’s new machine learning framework lets developers build computer vision features inside apps using very little code. Supported features, delivered through the companion Vision framework, include face tracking, face detection, landmarks, text detection, rectangle detection, barcode detection, object tracking, and image registration.

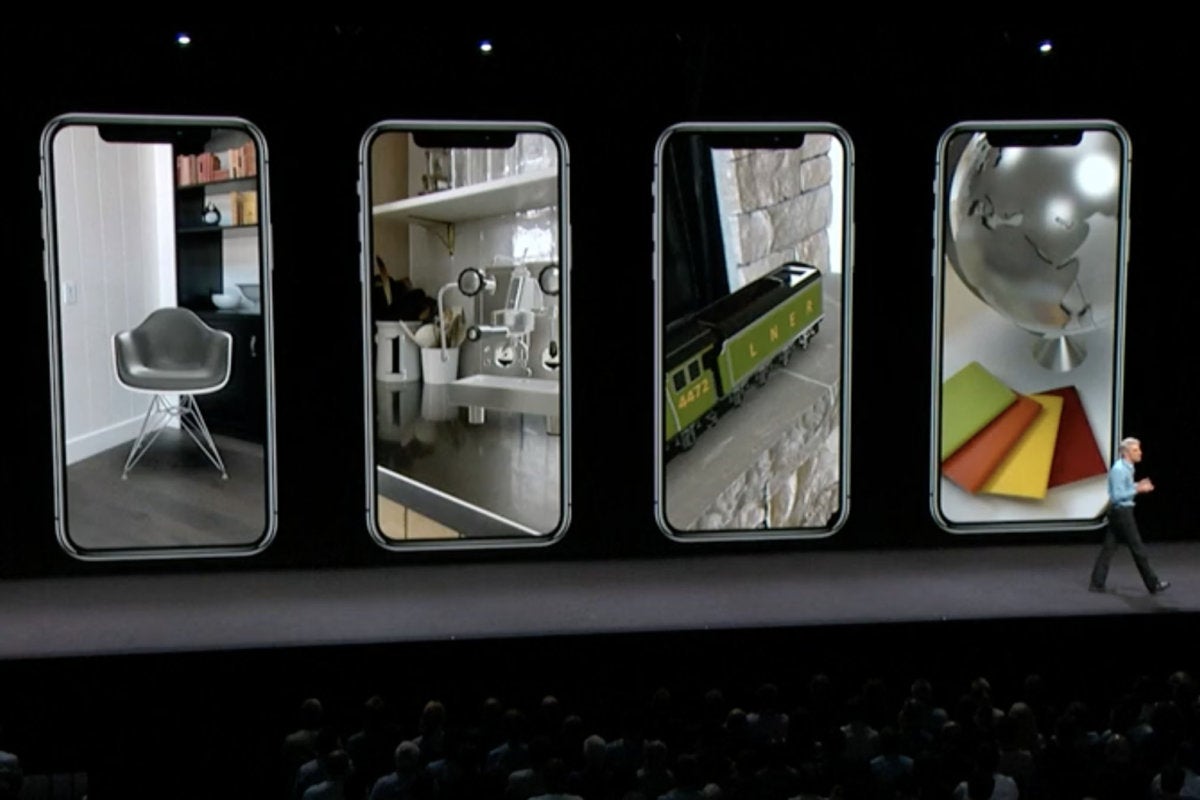

- ARKit 2: The capacity to create shared AR experiences, and to allow others to also witness events taking place in AR space, opens up other opportunities to twin the virtual and real worlds.

- USDZ: Apple’s newly revealed Universal Scene Description (USDZ) file format allows AR objects to be shared. You can embed USDZ items in a web page, or share them by mail. You’ll see this evolve for use across retail and elsewhere.

- Memoji: More than they seem, Memoji’s capacity to respond to your facial movements reflects Apple’s gradual move to explore emotion sensing. Will your personal avatar one day represent you in Group FaceTime VR chat?

- Photos: Apple has boosted the app with the capacity not just to identify all kinds of objects in your images, but also to recommend ways to improve an image and to suggest new image collections based on what Siri learns you like. Core ML and improved Siri mean Google Lens must be in Apple’s sights.

- Measure: Apple’s new ARKit measurement app is useful, but it also means that AR objects can begin to offer a much more sophisticated ability to scale, and it provides the camera sensor (and thus the AI) with more accurate information about distance and size.

Other features, such as Continuity Camera (which lets you use your iPhone to scan an item for use by your Mac) and, of course, Siri’s new Shortcuts feature, just make it easier for third-party developers to contribute to this effort.

All of these improvements work with Apple’s existing technologies that enhance image capture, recognition and analysis. Apple started using deep learning for face detection in iOS 10; now its Vision framework allows image recognition, image registration, and general feature tracking.

I’d argue that Apple’s ambition for these solutions is a lot broader than Amazon’s focus on shopping in Echo Look, or other vendors’ interest in collecting, categorizing and selling your user data as they gather it.

What can we expect?

I’ve just returned from WWDC where I got to know Apple’s new operating system, spoke with developers and attended several fascinating sessions.

While I was there, I was looking for a product that really illustrates how Apple’s machine learning and computer vision platforms might make a difference to daily lives. I think Apple Design Award winner Triton Sponge is a perfect illustration of this.

Seven years in development and now in use in U.S. operating theaters, Triton Sponge measures how much blood has been collected by surgical sponges during operations.

It does this using the iPad’s camera and machine learning to figure out the quantity of lost blood (the system is smart enough to recognize fakes and duplicates). It’s like TextGrabber for liquids. I spoke with company founder and CEO, Siddarth Satish, who told me one of the reasons he began developing the app was to help prevent the needless deaths of the 300,000 women who die of blood loss when giving birth.

It's a great example of the potential of Apple, AI and computer vision in healthcare. Think of it this way: if an iPad can figure out how much blood there is inside a medical sponge just by looking at a picture, then might Apple’s highly sophisticated TrueDepth camera be able to monitor respiration, pulse, and body temperature just by monitoring fluctuations in the skin on your face?

I believe that Satish and his team are developing profoundly transformational health-focused computer vision solutions, and that these are great examples of the potential Apple now offers its developer community.

More than a gesture

There are also implications for user interfaces. It is already possible to use mass-market technology to create user interfaces that can be controlled by gesture.

Since WWDC, one developer has built an app that lets you control your iPhone with your eyes.

Control your iPhone with your eyes. Just look at a button to select it and blink to press. Powered by ARKit 2. #ARKit #ARKit2 #WWDC #iOS pic.twitter.com/ow8TwEkC8J

— Matt Moss (@thefuturematt) June 7, 2018

Think of this in combination with Apple’s existing accessibility technologies and empowering solutions such as Shortcuts and the smarter Siri – and this Apple recruitment ad.
